LREC 2020 Workshop

Language Resources and Evaluation Conference

11–16 May 2020

WILDRE5– 5

th

Workshop on Indian Language

Data: Resources and Evaluation

PROCEEDINGS

Editors:

Girish Nath Jha, Kalika Bali, Sobha L, S. S. Agrawal, Atul Kr. Ojha

Proceedings of the LREC 2020

WILDRE5– 5

th

Workshop on Indian Language Data:

Resources and Evaluation

Edited by: Girish Nath Jha, Kalika Bali, Sobha L, S. S. Agrawal, Atul Kr. Ojha

ISBN: 979-10-95546-67-2

EAN: 9791095546672

For more information:

European Language Resources Association (ELRA)

9 rue des Cordelières

75013, Paris

France

http://www.elra.info

Email: [email protected]

c

European Language Resources Association (ELRA)

These workshop proceedings are licensed under a Creative Commons

Attribution-NonCommercial 4.0 International License

ii

Introduction

WILDRE – the 5

th

Workshop on Indian Language Data: Resources and Evaluation is being organized

in Marseille, France on May 16

th

, 2020 under the LREC platform. India has a huge linguistic diversity

and has seen concerted efforts from the Indian government and industry towards developing language

resources. European Language Resource Association (ELRA) and its associate organizations have been

very active and successful in addressing the challenges and opportunities related to language resource

creation and evaluation. It is, therefore, a great opportunity for resource creators of Indian languages

to showcase their work on this platform and also to interact and learn from those involved in similar

initiatives all over the world.

The broader objectives of the 5

th

WILDRE will be

• to map the status of Indian Language Resources

• to investigate challenges related to creating and sharing various levels of language resources

• to promote a dialogue between language resource developers and users

• to provide an opportunity for researchers from India to collaborate with researchers from other

parts of the world

The call for papers received a good response from the Indian language technology community. Out of

nineteen full papers received for review, we selected one paper for oral, four for short oral and seven for

a poster presentation.

iii

Workshops Chairs

Girish Nath Jha, Jawaharlal Nehru University, India

Kalika Bali, Microsoft Research India Lab, Bangalore

Sobha L, AU-KBC, Anna University

S. S. Agrawal, KIIT, Gurgaon, India

Workshop Manager

Atul Kr. Ojha, Charles University, Prague, Czech Republic & Panlingua Language Processing

LLP, India

Editors

Girish Nath Jha, Jawaharlal Nehru University, India

Kalika Bali, Microsoft Research India Lab, Bangalore

Sobha L, AU-KBC, Anna University

S. S. Agrawal, KIIT, Gurgaon, India

Atul Kr. Ojha, Charles University, Prague, Czech Republic & Panlingua Language Processing

LLP, India

iv

Programme Committee

Adil Amin Kak, Kashmir University

Anil Kumar Singh, IIT BHU, Benaras

Anupam Basu, Director, NIIT, Durgapur

Anoop Kunchukuttan, Microsoft AI and Research, India

Arul Mozhi, University of Hyderabad

Asif Iqbal, IIT Patna, Patna

Atul Kr. Ojha, Charles University, Prague, Czech Republic & Panlingua Language Processing

LLP, India

Bogdan Babych, University of Leeds, UK

Chao-Hong Liu, ADAPT Centre, Dublin City University, Ireland

Claudia Soria, CNR-ILC, Italy

Dafydd Gibbon, Universität Bielefeld, Germany

Daan van Esch, Google, USA

Dan Zeman, Charles University, Prague, Czech Republic

Delyth Prys, Bangor University, UK

Dipti Mishra Sharma, IIIT, Hyderabad

Diwakr Mishra, Amazon-Banglore, India

Dorothee Beermann, Norwegian University of Science and Technology (NTNU)

Elizabeth Sherley, IITM-Kerala, Trivandrum

Esha Banerjee, Google, USA

Eveline Wandl-Vogt, Austrian Academy of Sciences, Austria

Georg Rehm, DFKI, Germany

Girish Nath Jha, Jawaharlal Nehru University, New Delhi

Jan Odijk, Utrecht University, The Netherlands

Jolanta Bachan, Adam Mickiewicz University, Poland

Joseph Mariani, LIMSI-CNRS, France

Jyoti D. Pawar, Goa University

Kalika Bali, MSRI, Bangalore

Khalid Choukri, ELRA, France

Lars Hellan, NTNU, Norway

M J Warsi, Aligarh Muslim University, India

Malhar Kulkarni, IIT Bombay

Manji Bhadra, Bankura University, West Bengal

Marko Tadic, Croatian Academy of Sciences and Arts, Croatia

Massimo Monaglia, University of Florence, Italy

Monojit Choudhary, MSRI Bangalore

Narayan Choudhary, CIIL, Mysore

Nicoletta Calzolari, ILC-CNR, Pisa, Italy

Niladri Shekhar Dash, ISI Kolkata

Panchanan Mohanty, GLA, Mathura

Pinky Nainwani, Cognizant Technology Solutions, Bangalore

Pushpak Bhattacharya, Director, IIT Patna

Qun Liu, Noah’s Ark Lab, Huawei

Rajeev R R, ICFOSS, Trivandrum

v

Ritesh Kumar, Agra University

Shantipriya Parida, Idiap Research Institute, Switzerland

S.K. Shrivastava, Head, TDIL, MEITY, Govt of India

S.S. Agrawal, KIIT, Gurgaon, India

Sachin Kumar, EZDI, Ahmedabad

Santanu Chaudhury, Director, IIT Jodhpur

Shivaji Bandhopadhyay, Director, NIT, Silchar

Sobha L, AU-KBC Research Centre, Anna University

Stelios Piperidis, ILSP, Greece

Subhash Chandra, Delhi University

Swaran Lata, Retired Head, TDIL, MCIT, Govt of India

Virach Sornlertlamvanich, Thammasat University, Bangkok, Thailand

Vishal Goyal, Punjabi University, Patiala

Zygmunt Vetulani, Adam Mickiewicz University, Poland

vi

Table of Contents

Part-of-Speech Annotation Challenges in Marathi

Gajanan Rane, Nilesh Joshi, Geetanjali Rane, Hanumant Redkar, Malhar Kulkarni and Pushpak

Bhattacharyya . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

A Dataset for Troll Classification of TamilMemes

Shardul Suryawanshi, Bharathi Raja Chakravarthi, Pranav Verma, Mihael Arcan, John Philip Mc-

Crae and Paul Buitelaar . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

OdiEnCorp 2.0: Odia-English Parallel Corpus for Machine Translation

Shantipriya Parida, Satya Ranjan Dash, Ond

ˇ

rej Bojar, Petr Motlicek, Priyanka Pattnaik and Deba-

sish Kumar Mallick . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

Handling Noun-Noun Coreference in Tamil

Vijay Sundar Ram and Sobha Lalitha Devi . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

Malayalam Speech Corpus: Design and Development for Dravidian Language

Lekshmi K R, Jithesh V S and Elizabeth Sherly . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 25

Multilingual Neural Machine Translation involving Indian Languages

Pulkit Madaan and Fatiha Sadat . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

Universal Dependency Treebanks for Low-Resource Indian Languages: The Case of Bhojpuri

Atul Kr. Ojha and Daniel Zeman . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

A Fully Expanded Dependency Treebank for Telugu

Sneha Nallani, Manish Shrivastava and Dipti Sharma . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

Determination of Idiomatic Sentences in Paragraphs Using Statement Classification and Generalization

of Grammar Rules

Naziya Shaikh . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

Polish Lexicon-Grammar Development Methodology as an Example for Application to other Languages

Zygmunt Vetulani and Gra

˙

zyna Vetulani . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

Abstractive Text Summarization for Sanskrit Prose: A Study of Methods and Approaches

Shagun Sinha and Girish Jha . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

A Deeper Study on Features for Named Entity Recognition

Malarkodi C S and Sobha Lalitha Devi . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

vii

Workshop Program

Saturday, May 16, 2020

14:00–

14:45

Inaugural session

14:00–

14:05

Welcome by Workshop Chairs

14:05–

14:25

Inaugural Address

14:25–

14:45

Keynote Lecture

14:45–

16:15

Paper Session

Part-of-Speech Annotation Challenges in Marathi

Gajanan Rane, Nilesh Joshi, Geetanjali Rane, Hanumant Redkar, Malhar Kulkarni

and Pushpak Bhattacharyya

A Dataset for Troll Classification of TamilMemes

Shardul Suryawanshi, Bharathi Raja Chakravarthi, Pranav Verma, Mihael Arcan,

John Philip McCrae and Paul Buitelaar

OdiEnCorp 2.0: Odia-English Parallel Corpus for Machine Translation

Shantipriya Parida, Satya Ranjan Dash, Ond

ˇ

rej Bojar, Petr Motlicek, Priyanka Pat-

tnaik and Debasish Kumar Mallick

Handling Noun-Noun Coreference in Tamil

Vijay Sundar Ram and Sobha Lalitha Devi

Malayalam Speech Corpus: Design and Development for Dravidian Language

Lekshmi K R, Jithesh V S and Elizabeth Sherly

16:15–

16:25

Break

viii

Saturday, May 16, 2020 (continued)

16:25–

17:45

Poster Session

Multilingual Neural Machine Translation involving Indian Languages

Pulkit Madaan and Fatiha Sadat

Universal Dependency Treebanks for Low-Resource Indian Languages: The Case

of Bhojpuri

Atul Kr. Ojha and Daniel Zeman

A Fully Expanded Dependency Treebank for Telugu

Sneha Nallani, Manish Shrivastava and Dipti Sharma

Determination of Idiomatic Sentences in Paragraphs Using Statement Classification

and Generalization of Grammar Rules

Naziya Shaikh

Polish Lexicon-Grammar Development Methodology as an Example for Application

to other Languages

Zygmunt Vetulani and Gra

˙

zyna Vetulani

Abstractive Text Summarization for Sanskrit Prose: A Study of Methods and Ap-

proaches

Shagun Sinha and Girish Jha

A Deeper Study on Features for Named Entity Recognition

Malarkodi C S and Sobha Lalitha Devi

17:45–

18:30

Panel discussion

ix

Saturday, May 16, 2020 (continued)

18:30–

18:40

Valedictory Address

18:40–

18:45

Vote of Thanks

x

Proceedings of the WILDRE5– 5

th

Workshop on Indian Language Data: Resources and Evaluation, pages 1–6

Language Resources and Evaluation Conference (LREC 2020), Marseille, 11–16 May 2020

c

European Language Resources Association (ELRA), licensed under CC-BY-NC

Part-of-Speech Annotation Challenges in Marathi

Gajanan Rane, Nilesh Joshi, Geetanjali Rane, Hanumant Redkar,

Malhar Kulkarni and Pushpak Bhattacharyya

Center For Indian Language Technology

Indian Institute of Technology Bombay, Mumbai, India

{gkrane45, joshinilesh60, geetanjaleerane, hanumantredkar,

malharku and pushpakbh}@gmail.com

Abstract

Part of Speech (POS) annotation is a significant challenge in natural language processing. The paper discusses issues and

challenges faced in the process of POS annotation of the Marathi data from four domains viz., tourism, health, entertainment

and agriculture. During POS annotation, a lot of issues were encountered. Some of the major ones are discussed in detail in

this paper. Also, the two approaches viz., the lexical (L approach) and the functional (F approach) of POS tagging have been

discussed and presented with examples. Further, some ambiguous cases in POS annotation are presented in the paper.

Keywords: Marathi, POS Annotation, POS Tagging, Lexical, Functional, Marathi POS Tagset, ILCI

1 Introduction

In any natural language, Part of Speech (POS) such as

noun, pronoun, adjective, verb, adverb, demonstrative,

etc., forms an integral building block of a sentence struc-

ture. POS tagging

1

is one of the major activities in Natural

Language Processing (NLP). In corpus linguistics, POS

tagging is the process of marking/annotating a word in a

text/corpus which corresponds to a particular POS. The

annotation is done based on its definition and its context

i.e., its relationship with adjacent and related words in a

phrase, sentence, or paragraph. A simplified form of this

is commonly taught to school-age children, in the identifi-

cation of words as nouns, verbs, adjectives, adverbs, etc.

The term ‘Part-of-Speech Tagging’ is also known as POS

tagging, POST, POS annotation, grammatical tagging or

word-category disambiguation.

In this paper, the challenges and issues in POS tagging

with special reference to Marathi

2

have been presented.

The Marathi language is one of the major languages of

India. It belongs to the Indo-Aryan Language family with

about 71,936,894 users

3

. It is predominantly spoken in the

state of Maharashtra in Western India (Chaudhari et. al.,

2017). In recent years many research institutions and or-

ganizations are involved in developing the lexical re-

sources for Marathi for NLP activities. Marathi Wordnet

is one such lexical resource developed at IIT Bombay

(Popale and Bhattacharyya, 2017).

The paper is organized as follows: Section 2 introduces

POS annotation; section 3 provides information on Mara-

thi annotated corpora; section 4 describes Marathi tag set;

section 5 explains tagging approaches, section 6 presents

ambiguous behaviors of the Marathi words, section 7

presents a discussion on special cases, and section 8 con-

cludes the paper with future work.

1

http://language.worldofcomputing.net/pos-tagging/parts-of-

speech-tagging.html

2

http://www.indianmirror.com/languages/marathi-language.html

3

http://www.censusindia.gov.in/

2 Parts-Of-Speech Annotation

In NLP pipeline POS tagging is an important activity

which forms the base of various language processing ap-

plications. Annotating a text with POS tags is a standard

low-level text pre-processing step before moving to higher

levels in the pipeline like chunking, dependency parsing,

etc. (Bhattacharyya, 2015). Identification of the parts of

speech such as nouns, verbs, adjectives, adverbs for each

word (token) of the sentence helps in analyzing the role of

each word in a sentence (Jurafsky D. et. al., 2016). It

represents a token level annotation wherein it assigns a

token with POS category.

3 Marathi Annotated Corpora

Aim of POS tagging is to create a large annotated corpora

for natural language processing, speech recognition and

other related applications. Annotated corpora serve as an

important resource in NLP activities. It proves to be a ba-

sic building block for constructing statistical models for

the automatic processing of natural languages. The signi-

ficance of large annotated corpora is widely appreciated

by researchers and application developers. Various re-

search institutes in India viz., IIT Bombay

4

, IIIT Hydera-

bad

5

, JNU New Delhi

6

, and other institutes have devel-

oped a large corpus of POS tagged data. In Marathi, there

is around 100k annotated data developed as a part of In-

dian Languages Corpora Initiative (ILCI)

7

project funded

by MeitY

8

, New Delhi. This ILCI corpus consists of four

domains viz., Tourism, Health, Agriculture, and Enter-

tainment. This tagged data (Tourism - 25K, Health - 25K,

Agriculture - 10K, Entertainment - 10K, General – 30K)

is used for various applications like chunking, dependency

tree banking, word sense disambiguation, etc. This ILCI

4

http://www.cfilt.iitb.ac.in/

5

https://ltrc.iiit.ac.in/

6

https://www.jnu.ac.in/

7

http://sanskrit.jnu.ac.in/ilci/index.jsp

8

https://meity.gov.in/

1

annotated data forms a baseline for Marathi POS tagging

and is available for download at TDIL portal

9

.

4 The Marathi POS Tag-Set

The Bureau of Indian Standards (BIS)

10

has come up with

a standard set of tags for annotating data for Indian lan-

guages. This tag-set is prepared for Hindi under the guid-

ance of BIS. The BIS tag-set aims to ensure standardiza-

tion in the POS tagging across the Indian languages. The

tag sets of all Indian languages have been drafted by Dept.

of Information Technology, MeitY and presented as Uni-

fied POS standard in Indian languages

11

. Marathi POS

tag-set has been prepared at IIT Bombay referring to the

standard BIS POS Tag-set, IIIT Hyderabad guideline doc-

ument (Bharati et al, 2006) and Konkani Tag-set (Vaz et.

al., 2012). This Marathi POS Tag-set can be seen in Ap-

pendix A.

5 Lexical and Functional POS Tagging:

Challenges and Discussions

Lexical POS tagging (Lexical or L approach) deals with

tagging of a word at a token level. Functional POS tag-

ging (Functional or F approach) deals with tagging of a

word as a syntactic function of a word in a sentence. In

other words, a word can have two roles viz., grammatical

role (lexical POS w.r.t. a dictionary entry) and functional

role (contextual POS)

12

. For example, in the phrase ‘golf

stick’, the POS tag of the word ‘golf’ could be determined

as follows:

Lexically it is a noun as per lexicon.

Functionally it is an adjective as it is a modifier of

succeeding noun.

In the initial stage of ILCI data annotation, POS tagging

was conducted using the lexical approach. However, over

a while, POS tagging was done using the functional ap-

proach only. The reason is that, by using the lexical ap-

proach we do a general tagging, i.e., tagging at a surface

level or token level and by using the functional approach

we do a specific tagging, i.e., tagging at a semantic level.

This eases the annotation process of chunking and parsing

in the NLP pipeline.

While performing POS annotation, many issues and chal-

lenges were encountered, some of which are discussed be-

low. Table 1 lists the occurrences of discussed words in

the ILCI corpus.

5.1 Subordinators which act as Adverbs

There are three basic types of adverbs. They are time

(N_NST), place (N_NST) and manner (RB). Traditional-

ly, adverbs should be tagged as RB. Subordinators are

conjunctions which are tagged as CCS.

However, there are some subordinators in Marathi which

act as adverbs. For example, ामाणे (jyApramANe, like-

9

https://www.tdil-dc.in/

10

http://www.bis.gov.in/

11

http://tdil-dc.in/tdildcMain/articles/134692Draft POS Tag stan-

dard.pdf

12

https://www.cicling.org/2018/cicling18-hanoi-special-event-

23mar18.pdf

wise), ामाणे (tyApramANe, like that), ामाणे (hyA-

pramANe, like this), जेा (jevhA, when) and तेा (tevhA,

then). ामाणे (jyApramANe) and ामाणे (tyApramANe)

are generated from pronominal stems viz., ा (jyA) and

ा (hyA) hence they are lexically qualified as pronouns,

however, they function as adverbs; hence to be functional-

ly tagged as RB at the individual level. However, when

these words appear as part of the clause then they should

be functionally tagged as CCS.

This distinction was also observed by noted Marathi

grammarian, Damle

13

(Damle, 1965) [p. 206-07].

5.2 Words with Suffixes

There are suffixes like मुळे (muLe, because of; due to),

साठी (sAThI, for), बरोबर, (barobara, along with), etc.

When these suffixes are attached to pronouns they func-

tion as adverbs or conjunctions at a syntactic level. For

example, words ामुळे (tyAmuLe, because of that), यामुळे

(yAmuLe, because of this), यासाठी (yAsAThI, for this),

ामुळे (hyAmuLe, because of this), ाामुळे (hyAchyA-

muLe, because of it/him), यांामुळे (yAMchyAmuLe, be-

cause of them), ाचबरोबर (=तसेच) (tyAchabarobara

(=tasecha), further) are formed by attaching the above

suffixes to pronouns. These words which are formed are

lexically tagged as PRP. However, functionally these

words act as conjunctions at the sentence level; therefore,

they should be tagged as CCD. Also, consider the words

ावेळी (tyAveLI, at that time), ावेळी (hyAveLI, at this

time), ानंतर (hyAnaMtara, after this), ानंतर (tyAnaMta-

ra, after that). Here, wherever the first string/morpheme

appears as ा (tyA) and ा (hyA), the tag should be given

as PRP, lexically. But functionally, all these words shall

be tagged as N_NST (time adverb).

5.3 Words which are Adjectives

Adjectives are tagged as JJ. Consider the example below:

ाामे ही कला परंपरागत चालत आली आहे (tyAchyAmadhye

hI kalA paraMparAgata chAlataAlIAhe, this art has come

to him by tradition). Lexically, the word परंपरागत (pa-

raMparAgata, traditional) is an adjective, but, in the

above sentence, it qualifies the verb चालत येणे (chAla-

tayeNe, to be practiced). Hence functionally, the word

परंपरागत (paraMparAgata) should be tagged as an RB.

Similarly, a word वाईट (vAITa, bad) has a lexical POS as

an adjective (Date-Karve, 1932). But in the sentence मला

वाईट वाटते (malA vAITa vATate, I am feeling bad), it func-

tions as an adverb, as it is qualifying the verb and not pre-

ceding the pronoun मला (malA, I; me). Therefore, func-

tionally word वाईट (vAITa) acts as adverb hence should be

tagged as RB.

5.4 Adnominal Suffixes Attached to Verbs

The adnominal suffix जोगं (jogaM) and all its forms (जोगा,

जोगी, जोगे, जोा; jogA, jogI, joge, jogyA) are always at-

tached to verbs. For example, word कराजोा (karaNyA-

jogyA, doable) is lexically tagged as a verb. However,

word करा (karaNyA) is a Kridanta form of a verb करणे

(karaNe, to do) and suffix जोगं (jogaM) is an adnominal

suffix attached to Kridanta form; hence, a verb with all the

13

http://www.cfilt.iitb.ac.in/damale/index.html

2

forms of जोगं (jogaM) should functionally be treated as ad-

jectives. Therefore verbs with adnominal suffix should be

tagged as JJ.

5.5 Words जसे (jase) तसे (tase)

As per Damle (1956), words जसे (jase, like this) and तसे

(tase, like that) are tagged as adverbs. However, if they

appear with nouns in a sentence, they are influenced by

the inflection and gender property of that nominal stem.

For example, words जसे (jase, like this) and तसे (tase, like

that) have inflected forms like जसा (jasA, like him), जशी

(jashI, like her), तसा (tasA, like this), तशी (tashI, like this),

तसे (tase, like this), etc. All these words function as a rela-

tive pronoun in a sentence. Hence, the words and their

variations should be functionally tagged as PRL.

5.6 Word तसेतर (tasetara)

A word तसेतर (tasetara, as it is seen) is the same as तसे

पािहले तर (tase pAhile tara, as it is seen). Lexically, it can

be tagged as a particle (RPD) but since it has a function of

conjunction; it should be tagged as CCD. For example, in

a sentence तसेतर तणावामुळेही काळी वलय येतात (tasetara ta-

NAvAmuLehI kALI valaya yetAta, as it is seen that black

circles appear because of stress as well), word तसेतर (tase-

tara) functions as conjunction and hence should be tagged

as CCD instead of tagging it as RPD.

5.7 Word अथा (anyathA)

The standard dictionaries give POS of the word अथा

(anyathA, otherwise; else; or) as an adverb/indeclinable.

For example, consider a sentence अथा तो येणार नाही (any-

athA to yeNAra nAhI, Otherwise, he will not come). Here,

while annotating अथा (anyathA) there is a possibility

that annotator can directly tag this word as an adverb at a

lexical level. However, it behaves like conjunction at the

sentence level and hence it should be tagged as CCD.

5.8 Different Forms of कसा (kasA)

As per BIS Tag-set, words कसा, कशी, कसे (kasA, kashI,

kase; how) shall be tagged as PRQ. However, the PRQ tag

is only for pronoun category and the word कसा (kasA) is

not a pronoun; it can behave as an adverb or as a modifier.

Consider the examples below:

1. तो माणूस कसा आहे हे ााशी बोलावरच कळे ल

(to mANUsa kasA Ahe he tyAchyAshI bolalyA-

varacha kaLela, we will come to know about him

only after talking to him) [adnominal]

2. सरकारी ठरावाने कायाचे कलम कसे र होणार

(sarakArI TharAvAne kAyadyAche kalama kase

radda hoNAra, How can this clause of law be

prohibited by Government Resolution?) [adver-

bial]

In the 1st case, word कसा (kasA, how) functionally acts

as a pronoun, hence to be tagged as PRQ. While, in the

2nd case, it acts as an adverb, hence to be functionally

tagged as RB.

5.9 Word मा (mAtra)

A word मा (mAtra) is very ambiguous in its various

usages; it is difficult to functionally identify the POS of

this word at a sentence level. Various meanings of word

मा (mAtra) are given in Data-Karve dictionary

14

. Some

of the different senses of मा (mAtra) are discussed here:

When the word मा (mAtra) conveys the mean-

ing of ही, देखील, सुा (hI, dekhIla, suddhA; also)

then it should be tagged as RB functionally.

When a word is related to the preceding word तेथे

(tethe, there) and its function is an emphatic

marker च (cha) then it should be tagged as RPD

functionally.

When word मा (mAtra) appears in the form of

conjunction then it should be marked as CC

functionally.

If the word is modifying the succeeding noun,

then it should be tagged as JJ functionally.

If the word is modifying the preceding word,

then the tag will be RPD as a particle functional-

ly.

Therefore, it is noticed that the word मा (mAtra) does not

have one single POS tag functionally and it depends upon

the appearance in a sentence. Hence, should be tagged as

per the usage.

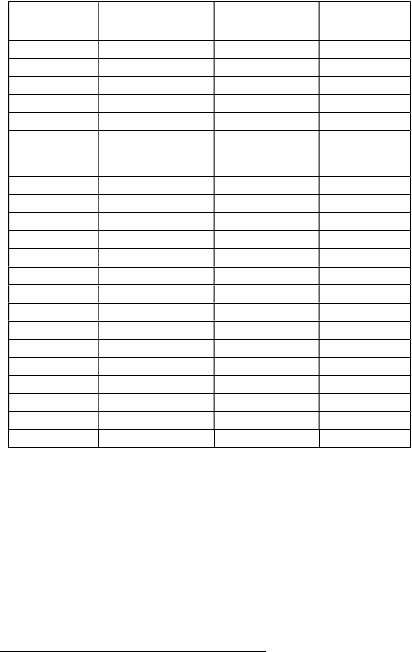

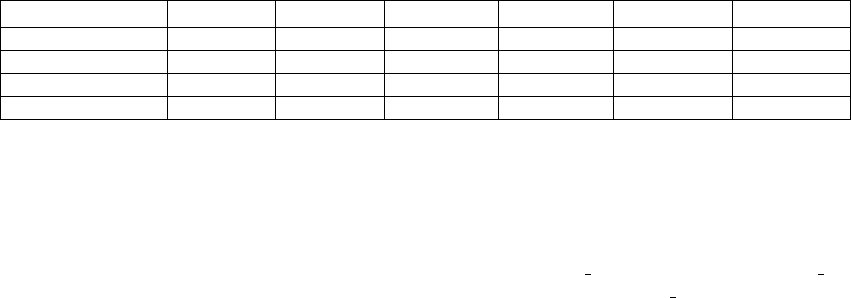

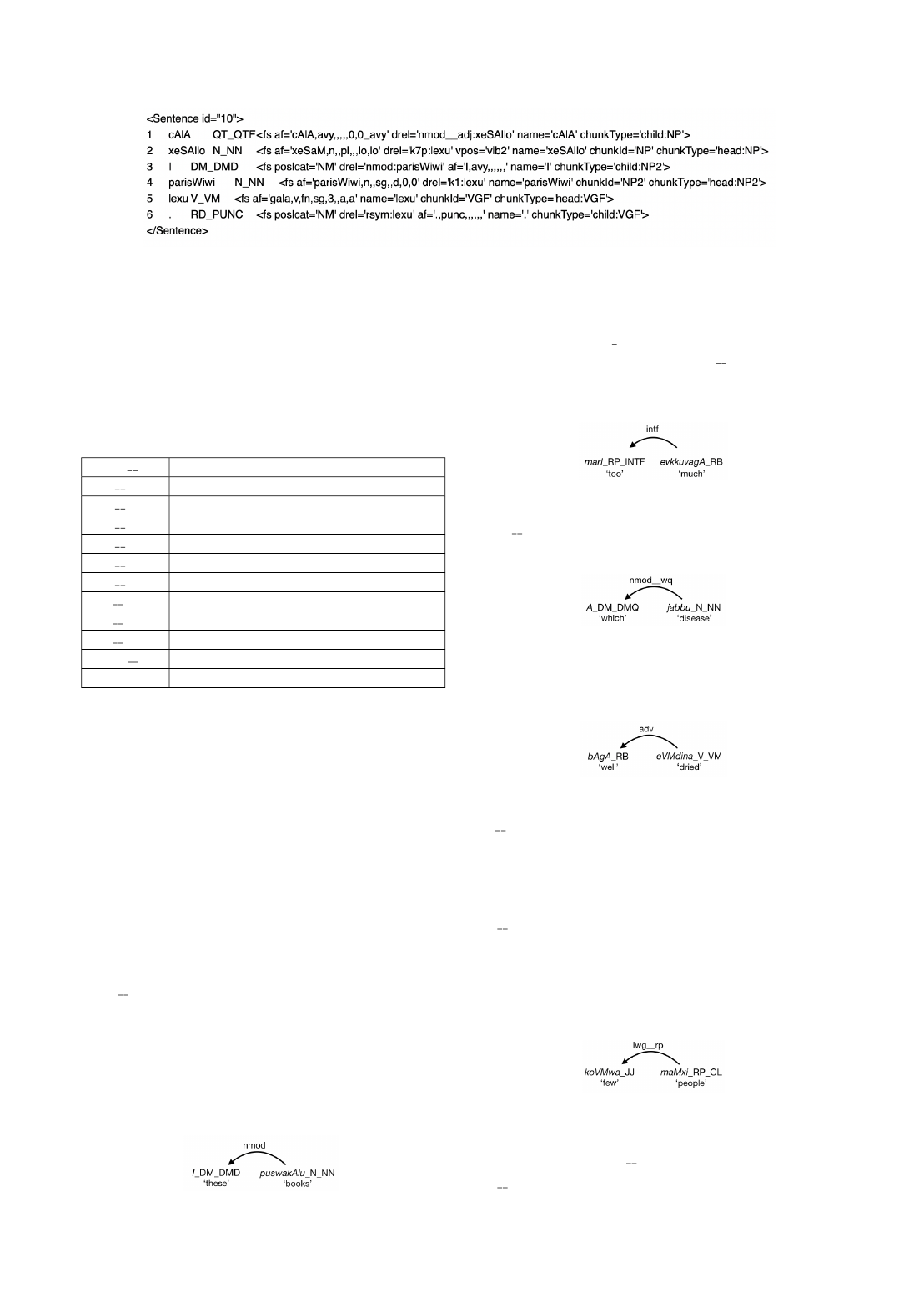

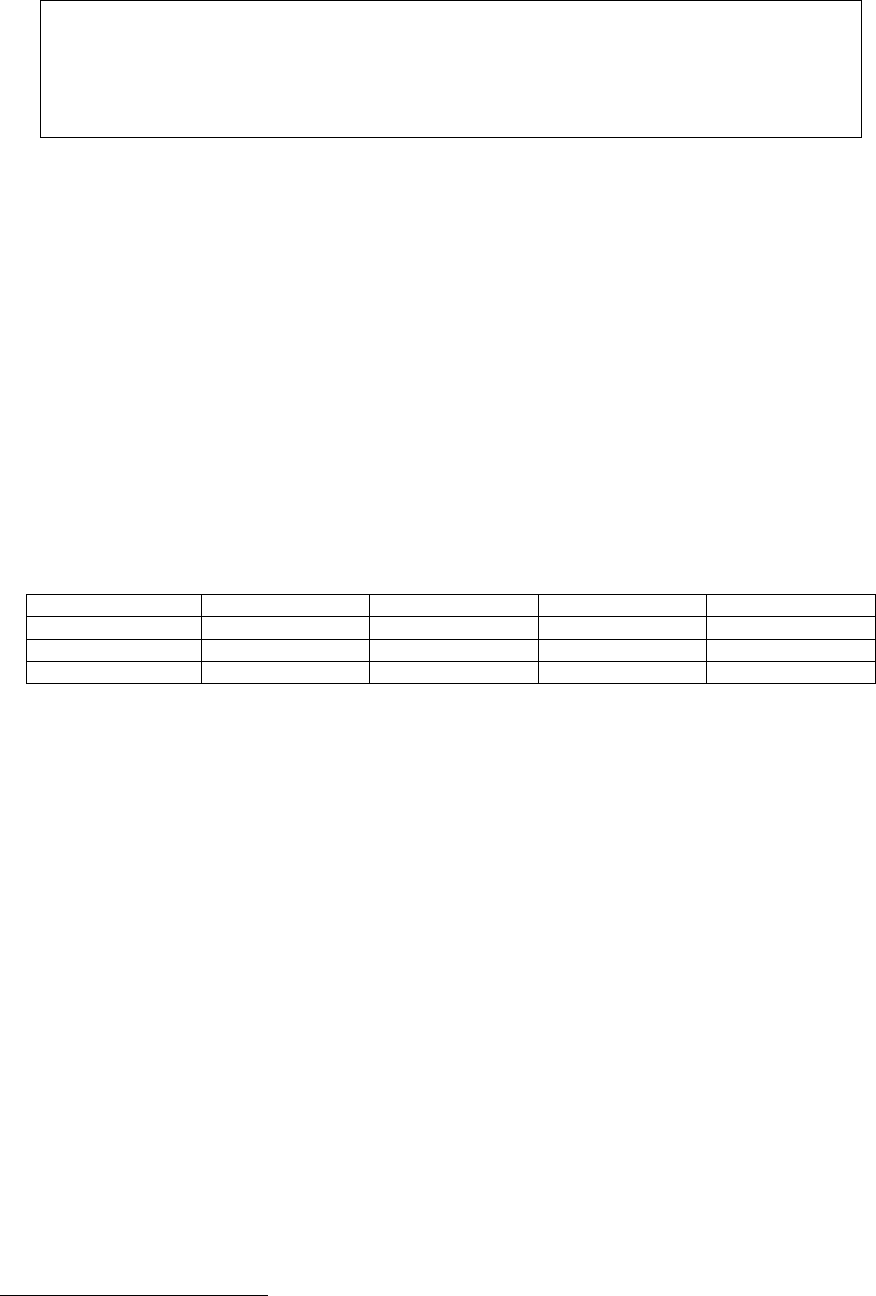

Token Lexical Functional

Occur-

rences

ामाणे

Pronoun Adverb 94

ामाणे

Pronoun Adverb 180

ामाणे

Pronoun Adverb 8

जेा

Subordinator Time adverb 1496

तेा

Subordinator Time adverb 1577

मा

Adverb

Post-position

Conjunction

Particle

426

तसेतर

Particle Conjunction 8

कसा

Wh-word Adverb 269

ामुळे

Pronoun Conjunction 530

ामुळे

Pronoun Conjunction 424

ाामुळे

Pronoun Conjunction 8

ावेळी

Pronoun Time adverb 244

ावेळी

Pronoun Time adverb 33

ानंतर

Pronoun Time adverb 71

ानंतर

Pronoun Time adverb 298

परंपरागत

Adjective Adverb 104

वाईट

Adjective Adverb 246

अथा

Adverb Conjunction 24

जसे

Relative pronoun Adverb 1007

तसे

Relative pronoun Adverb 511

कराजोा

Verb Adjective 97

Table 1: Occurrences of discussed words and lexical v/s

functional tags assigned to these words

6 POS Ambiguity: Challenges and Discus-

sions

Ambiguity is a major open problem in NLP. Several POS

level ambiguity issues were faced by annotators while an-

notating the Marathi corpus. Following are some POS

14

http://www.transliteral.org/dictionary/mr.kosh.maharasht

ra/source

3

specific ambiguity problems encountered while annotat-

ing.

6.1 Ambiguous POS: Adjective or Noun?

Examples: वयर (vayaskara, the aged)

कु टुंबाा वयर सदांनी मतदान के ले (kuTuM-

bAchyA vayaskara sadasyAMnI matadAnakele,

all the aged members of the family voted).

सव वयरांनी मतदान के ले (sarva vayaskarAMnI

matadAna kele, all the aged people voted).

In the above examples, the word वयर (vayaskarAMnI)

lexically acts as an adjective as well as a noun. However,

at the syntactic level, in the first example, it is functioning

as adjective hence to be tagged as JJ, while in the second

example it is functioning as a noun hence to be tagged as

N_NN. This is one of the challenges while annotating ad-

jectives appearing in nominal form. Annotators usually

fail to disambiguate these types of words at the lexical

level; therefore such words should be disambiguated at

syntactic level. Hence, annotators need to take special

care while annotating such cases.

6.2 Ambiguous POS: Demonstrators

While annotating demonstrators such as हा, ही, हे, तो, ती, ते

((hA, hI, he), this), (to, tI, te), that) annotators often get

confused whether to tag them as DMD or DMR. Simple

guideline can be followed is, if the demonstrator is direct-

ly following noun, then tag it as DMD, otherwise tag it as

DMR i.e., if the demonstrator is referring to previous

noun/person.

6.3 Ambiguous POS: Noun and Conjunction

Example: word कारण (kAraNa, reason; because). At se-

mantic level, the word कारण (kAraNa) has two meanings,

one is ‘a reason’ which acts as a noun and another is ‘be-

cause’ which acts as a conjunction. Annotators have to

pay special attention while tagging such cases.

6.4 Ambiguous Words: ते (te) and तेही (tehI)

The word ते (te) has different grammatical categories like

pronoun (they), demonstrator (that) and conjunction (to).

Examples:

३० ते ४० (30 te 40, 30 to 40)

The word ते (te) lexically and functionally acts as

conjunction, hence to be tagged as CCD.

ते णाले (te mhaNAle, they said)

Here word ते (te) acts as personal pronoun, hence

to be tagged as PR_PRP

ते कु ठे आहेत? (te kuThe Aheta?, where are they?)

Here word ते (te) acts as relative demonstrator,

hence to be tagged as DM_DMR

राके शने पोलीसांना फोन के ला आिण ते दोी चोर पकडले

गेले (rAkeshane polIsAMnA phona kelA ANi te

donhI chora pakaDale gele, Rakesh called police

and those two thieves got caught).

Here, word ते (te) is modifying its succeeding

noun चोर (chora, thief) so it is Deictic demonstra-

tor, hence to be tagged as DM_DMD.

ांना हे कधीच पसंत नते, ांा मुलाने संगीत िशकावे

आिण तेही नृ (tyAMnA he kadhIchapasaMtanav-

hate, tyAMchyAmulAnesaMgItashikAveANite-

hInRRitya, He never wanted his son to learn mu-

sic and that too the dance form)

Here, the word तेही (tehI) is an ambiguous word. It is mod-

ifying succeeding noun or previous context. Here, ही (hI)

is a bound morpheme and conveys the meaning ‘also’.

Therefore word तेही (tehI) should be tagged as DM_DMR.

6.5 Ambiguous word: उलटा (ulaTA)

Examples:

उलटे टांगून सुकवले जाते (ulaTe TAMgUna sukavale

jAte). Here, उलटे (ulaTe, upside down is behav-

ing as manner, not a noun, hence to be tagged as

RB.

उलटे भांडे सुलटे कर (ulaTe bhAMDe sulaTe kara).

Here उलटे (ulaTe) it is modifying succeeding

noun, hence it is an adjective, hence to be tagged

as JJ.

In the above examples, annotator should identify word

behavior in the sentence and tag accordingly.

6.6 Ambiguous words: िकतीही (kitIhI), ना का

(nA kA) and असू दे ना का (asU de nA kA)

Examples:

संगणक हा िकतीही गत िकं वा चतुर असू दे ना का, तो

के वळ तेच काम क शकतो ाची िवधी (पत)

आपाला त: मािहत आहे. (saMgaNaka hA kitIhI

pragata kiMvA chatura asU de nA kA, to kevaLa

techa kAma karU shakato jyAchI vidhI (paddha-

ta) ApalyAlA svata: mAhita Ahe¸ The computer

how much ever may be advanced and clever, it

only does that work whose method we only

know). Here, िकतीही (kitIhI, how much) is a

quantifier, hence to be tagged as QTF.

In the phrase असू दे ना का (asU de nA kA), the to-

ken ना (nA) is a part of verb असू दे (asU de, let it

be) and should be tagged as VM, hence the

phrase should be tagged as VM, while the token

का (kA) is acting as a particle in this phrase and

not as a question marker, therefore का (kA)

should be tagged as RPD.

िकती माणसे जेवायला होती? (kitI mANase jevAyalA

hotI, how many people were there for a meal?).

Here, िकती (kitI, how many) is a question so it

should be tagged as DMQ.

6.7 Ambiguous word: तर (tara)

Examples:

Conjunction: जर मी वेळीच गेलो नसतो तर हा वाचला

नसता (jara mI veLIcha gelo nasato tara hA vA-

chalA nasatA, if I had not gone on time he would

have not survived).

Particle: 'हो! आता मी जातो तर!' = 'मी अिजबात जाणार

नाही’('ho! AtA mI jAto tara!' = 'mI ajibAta jANA-

4

ra nAhI’, ‘yes! now I am leaving then’ = ‘I am

not at all leaving’).

In the above sentences, the word तर (tara) is used as a

supplementary or stressable word so somewhat spe-

cial as to give meaning in the sentence. (Date-Karve,

1932). Hence it should be treated as CCD.

तुी तर लाख पये मागतां व मी तर के वळ गरीब पडलो

(tumhI tara lAkha rupaye mAgatAM va mI tara

kevaLa garIba paDalo, you are asking for lakh

rupees and I am a poor person). In this sentence,

the word तर (tara) indicates opposition with re-

spect to meaning between two connected sen-

tences. (Date-Karve, 1932). Hence, it should be

treated as a RPD.

7 Discussions on Some Special Cases

Words आयु (Amlayukta), मलईरिहत (malaI-

rahita), मेदरिहत (medarahita), दुाळ (duSh-

kALagrasta) are combinations of noun plus ad-

jective suffix such as यु (yukta), (grasta)

and रिहत (rahita). In such cases, even though

noun is a head string and adjective part is a suf-

fix, the whole word shall be tagged as JJ.

Before tagging अभंग (abhaMga, verses), ओा

(ovyA, stanzas), का (kAvya, poetry), etc., anno-

tator shall first read between the lines; under-

stand the meaning which it conveys and then de-

cide upon the grammatical categories of each to-

ken. For example, in sentence कळावे तयासी कळे

अंतरीचे कारण ते साचे साच अंगी (kaLAve tayAsI kale

aMtarIche kAraNa te sAche sAcha aMgI) the

POS tagging should be done as साचे\V_VM

साच\N_NN अंगी\N_NN, etc.

Doubtful cases of word कोणता (koNatA)

Examples:

o कोणता मुलगा शार आहे (koNatA mulagA hu-

shAra Ahe)?

o वाहतुकीा दरान कोणतीही हानी झालेली नाही

(vAhatukIchyA daramyAna koNatIhI hAnI

jhAlelI nAhI).

o ांा बोलाचा माावर कोणताही परणाम झाला

नाही (hyAMchyA bolaNyAchA mAjhyAvara

koNatAhI pariNAma jhAlA nAhI).

o शेतक यास कोणाही वष पााची कमतरता

भासणार नाही (shetakaryAsa koNatyAhI var-

ShI pANyAchI kamataratA bhAsaNAra nA-

hI).

Here, in the 1st example, the word कोणता (koNa-

tA, which one) undoubtedly is DMQ. In rest of

the examples कोणतीही (koNatAhI, whichever,

whomever), कोणताही (koNatAhI, whichever,

whomever), कोणाही (koNatyAhI, whichever,

whomever) are DM adjective (DMD).

8 Conclusion and Future Work

Marathi POS tagging is an important activity for NLP tasks.

While tagging, several challenges and issues were encoun-

tered. In this paper, Marathi BIS tag-set has been discussed.

Lexical and functional tagging approaches were discussed

with examples. Further, various challenges, experiences,

and special cases have been presented. The issues discussed

here will be helpful for annotators, researchers, language

learners, etc. of Marathi and other languages.

In future, more issues such as tagging for words having

multiple senses; words having multiple functional tags will

be discussed. Also, tagset comparison of close languages

will be done. Further, the evaluation of lexical and func-

tional tagging using statistical analysis will be done.

References

Akshar Bharati, Dipti Misra Sharma, Lakshmi Bai, Rajeev

Sangal. (2006). AnnCorra : Annotating Corpora Guide-

lines For POS And Chunk Annotation For Indian Lan-

guages. Language Technologies Research Centre, IIIT,

Hyderabad.

Chitra V. Chaudhari, Ashwini V. Khaire, Rashmi R. Mur-

tadak, Komal S. Sirsulla. (2017). Sentiment Analysis in

Marathi using Marathi WordNet. Imperial Journal of

Interdisciplinary Research (IJIR) Vol-3, Issue-4, 2017

ISSN: 2454-1362.

Damle, Moro Keshav. (1965). Shastriya Marathi Vyakran.

A scientific grammar of Marathi, 3

rd

edition. Pune, In-

dia: RD Yande.

Daniel Jurafsky & James H. Martin. (2016). Speech and

Language Processing.

Edna Vaz, Shantaram V. Walawalikar, Dr. Jyoti Pawar, Dr.

Madhavi Sardesai. (2012). BIS Annotation Standards

With Reference to Konkani Language. 24

th

Interna-

tional Conference on Computational Linguistics (COL-

ING 2012), Mumbai.

Lata Popale and Pushpak Bhattacharyya. (2017). Creating

Marathi WordNet. The WordNet in Indian Languages.

Springer, Singapore, 2017. 147-166.

Pushpak Bhattacharyya, (2015). Machine Translation,

Book published by CRC Press, Taylor and Francis

Group, USA.

Yashwant Ramkrishna Date, Chintman Ganesh Karve,

Aba Chandorkar, Chintaman Shankar Datar. (1932).

Maharashtra Shabdakosh. Published by H. A. Bhave,

Varada Books, Senapati Bapat Marg, Pune.

5

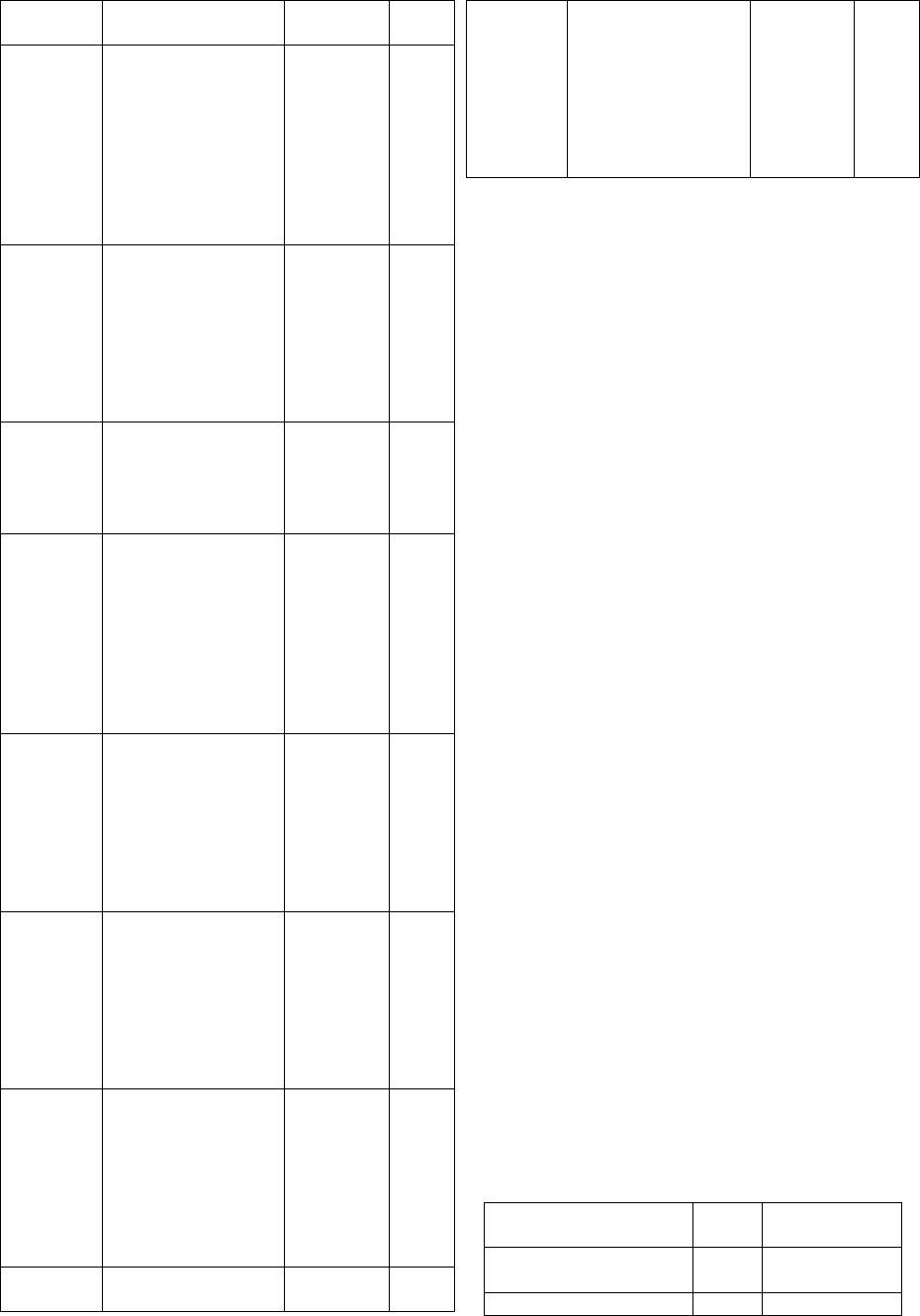

Appendix A

Marathi Parts of Speech Tag-Set with Examples

SI.

No

Category Label

Annotation

Convention

**

Examples

Top level &

Subtype

1

Noun (

नाम

)

N N

1.1

Common (जातीवाचक

नाम)

NN N_NN

गाय\N_NN गोात\N_NN राहते.

1.2 Proper (ीवाचक

नाम) NNP N_NNP रामाने\N_NNP रावणाला\N_NNP मारले.

1.3

Nloc (थल-काल)

NST N_NST

1.

तो

येथे

\N_NST

काम

करत

होता

.

2. ाने

ही

वू

खाली\N_NST ठेवली

आहे.

2

Pronoun (सवनाम)

PR PR

2.1 Personal (पुष

वाचक

) PRP PR_PRP

मी

\PR_PRP

येतो

.

2.2 Reflexive (आ

वाचक

) PRF PR_PRF

मी

तः\PR_PRF

आलो

.

2.3

Relative (संबंधी)

PRL PR_PRL

ाने\PR_PRL हे

सांिगतले

ाने

हे

काम

के ले

पािहजे.

2.4 Reciprocal (पाररक) PRC PR_PRC

परर

2.5

Wh-word (ाथक)

PRQ PR_PRQ

कोण

\PR_PRQ

येत

आहे

?

2.6 Indefinite (अिनित) PRI PR_PRI

कोणी

\PR_PRI

कोणास

\PR_PRI

हासू

नये

.

ा

पेटीत

काय\PR_PRI आहे

ते

सांगा.

3 Demonstrative (दशक)

DM DM

हे

पुक

माझे

आहे.

3.1 Deictic DMD DM_DMD

तो

\DM_DMD

मुलगा

शार

आहे

.

हा

\DM_DMD

मुलगा

शार

आहे

.

ही\DM_DMD मुलगी सुंदरआहे

.

जेथे\DM_DMD राम

होता

तेथे\DM_DMD तो

होता.

3.2 Relative DMR DM_DMR

हे

\DM_DMR

लाल

रंगाचे

असते

.

3.3 Wh-word DMQ DM_DMQ

कोणता

\DM_DMQ

मुलगा

शार

आहे

?

4

Verb (ियापद)

V V

4.1

Main (मु

ियापद)

VM V_VM

तो

घरी

गेला\V_VM.

4.2 Auxiliary (सहाक

ियापद

VAU

X

V_VAUX राम

घरी

जात

आहे\V_VAUX.

5 Adjective (िवशेषण) JJ

सुंदर\JJ मुलगी

6

Adverb (ियािवशेषण)

RB

हळूहळू \RB चाल.

7

Conjunction (उभयायी

अय)

CC CC

7.1 Coordinator CCD CC_CCD

तो

आिण

\ CC_CCD

मी

.

7.2 Subordinator CCS CC_CCS

जर

\CC_CCS ाने

सांिगतले

असते

तर

\CC_CCS

हे

काम

मी

के ले

असते.

7.2.1 Quotative UT

CC_CCS_U

T

असे\CC_CCS_UT णून\C_CCS_UT तो

पुढे

गेला.

8 Particles RP RP

8.1 Default RPD RP_RPD

मी

तर

\RP_RPD

खूप

दमले

.

8.2

Interjection (उार

वाचक)

INJ RP_INJ

अरेरे\RP_INJ ! सिचनची

िवके ट

ढापली.

8.3 Intensifier (ती

वाचक) INTF RP_INTF राम खूप\RP_INTF चांगला

मुलगा

आहे.

8.4

Negation (नकाराक)

NEG RP_NEG

नको

,

न

9

Quantifiers

QT

QT

9.1 General QTF QT_QTF

थोडी

\QT_QTF

साखर

ा.

9.2 Cardinals QTC QT_QTC

मला

एक

\QT_QTC

गोळी

दे

.

9.3 Ordinals QTO QT_QTO

माझा

पिह

ला

\QT_QTO मांक

आला

.

10

Residuals (उवरत) RD RD

10.1 Foreign word RDF RD_RDF

10.2 Symbol SYM RD_SYE $, &, *, (, ),

10.3 Punctuation

PUN

C

RD_PUNC

. (period), ,(comma), ;(semi-colon), !(exclama-

tion),? (question), : (colon), etc.

10.4 Unknown UNK RD_UNK Not able to identify the Tag.

10.5 Echo-words ECH RD_ECH

जेवण

िबवण

,

डोके

िबके

6

Proceedings of the WILDRE5– 5

th

Workshop on Indian Language Data: Resources and Evaluation, pages 7–13

Language Resources and Evaluation Conference (LREC 2020), Marseille, 11–16 May 2020

c

European Language Resources Association (ELRA), licensed under CC-BY-NC

A Dataset for Troll Classification of TamilMemes

Shardul Suryawanshi

1

, Bharathi Raja Chakravarthi

1

, Pranav Varma

2

,

Mihael Arcan

1

, John P. McCrae

1

and Paul Buitelaar

1

1

Insight SFI Research Centre for Data Analytics

1

Data Science Institute, National University of Ireland Galway

2

National University of Ireland Galway

{shardul.suryawanshi, bharathi.raja}@insight-centre.org

Abstract

Social media are interactive platforms that facilitate the creation or sharing of information, ideas or other forms of expression among

people. This exchange is not free from offensive, trolling or malicious contents targeting users or communities. One way of trolling is

by making memes, which in most cases combines an image with a concept or catchphrase. The challenge of dealing with memes is

that they are region-specific and their meaning is often obscured in humour or sarcasm. To facilitate the computational modelling of

trolling in the memes for Indian languages, we created a meme dataset for Tamil (TamilMemes). We annotated and released the dataset

containing suspected trolls and not-troll memes. In this paper, we use the a image classification to address the difficulties involved in the

classification of troll memes with the existing methods. We found that the identification of a troll meme with such an image classifier is

not feasible which has been corroborated with precision, recall and F1-score.

Keywords: Tamil dataset, memes classification, trolling, Indian language data

1. Introduction

Traditional media content distribution channels such as

television, radio or newspapers are monitored and scruti-

nized for their content. Nevertheless, social media plat-

forms on the Internet opened the door for people to con-

tribute, leave a comment on existing content without any

moderation. Although most of the time, the internet

users are harmless, some produce offensive content due to

anonymity and freedom provided by social networks. Due

to this freedom, people are becoming creative in their jokes

by making memes. Although memes are meant to be hu-

morous, sometimes it becomes threatening and offensive to

specific people or community.

On the Internet, a troll is a person who upsets or starts a

hatred towards people or community. Trolling is the activ-

ity of posting a message via social media that is intended

to be offensive, provocative, or menacing to distract which

often has a digressive or off-topic content with the intent of

provoking the audience (Bishop, 2013; Bishop, 2014; Mo-

jica de la Vega and Ng, 2018; Suryawanshi et al., 2020).

Despite this growing body of research in natural language

processing, identifying trolling in memes has yet to be in-

vestigated. One way to understand how meme varies from

other image posts was studied by Wang and Wen (2015).

According to the authors, memes combine two images or

are a combination of an image and a witty, catchy or sar-

castic text. In this work, we treat this task as an image

classification problem.

Due to the large population in India, the issue has emerged

in the context of recent events. There have been several

threats towards people or communities from memes. This

is a serious threat which shames people or spreads hatred

towards people or a particular community (Kumar et al.,

2018; Rani et al., 2020; Suryawanshi et al., 2020). There

have been several studies on moderating trolling, however,

for a social media administrator memes are hard to monitor

as they are region-specific. Furthermore, their meaning is

often obscure due to fused image-text representation. The

content in Indian memes might be written in English, in a

native language (native or foreign script), or in a mixture

of languages and scripts (Ranjan et al., 2016; Chakravarthi

et al., 2018; Jose et al., 2020; Priyadharshini et al., 2020;

Chakravarthi et al., 2020a; Chakravarthi et al., 2020b). This

adds another challenge to the meme classification problem.

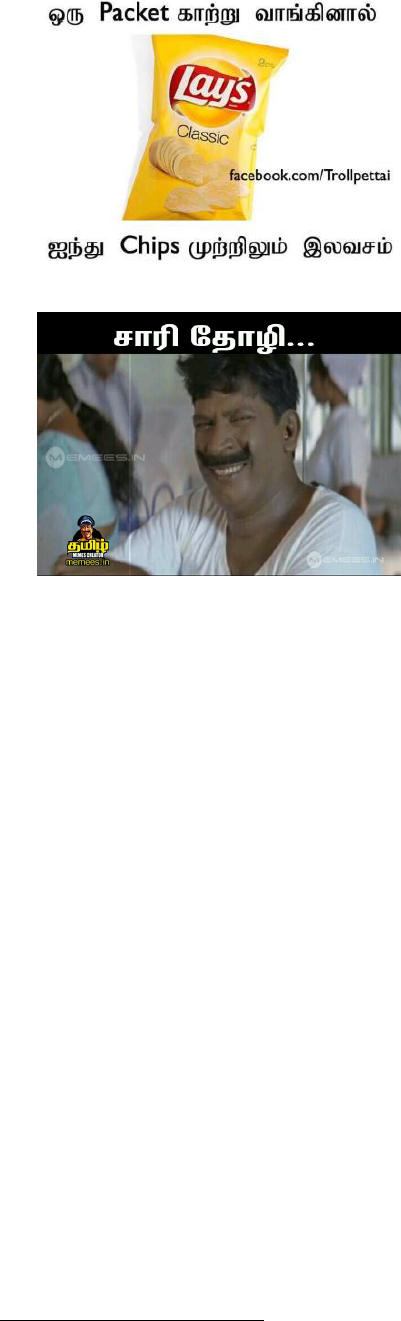

(a) Example 1

(b) Example 2

Figure 1: Examples of Indian memes.

7

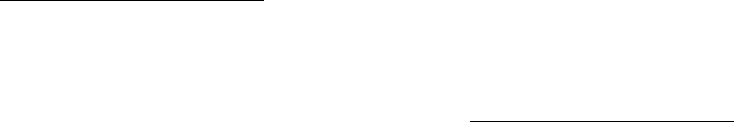

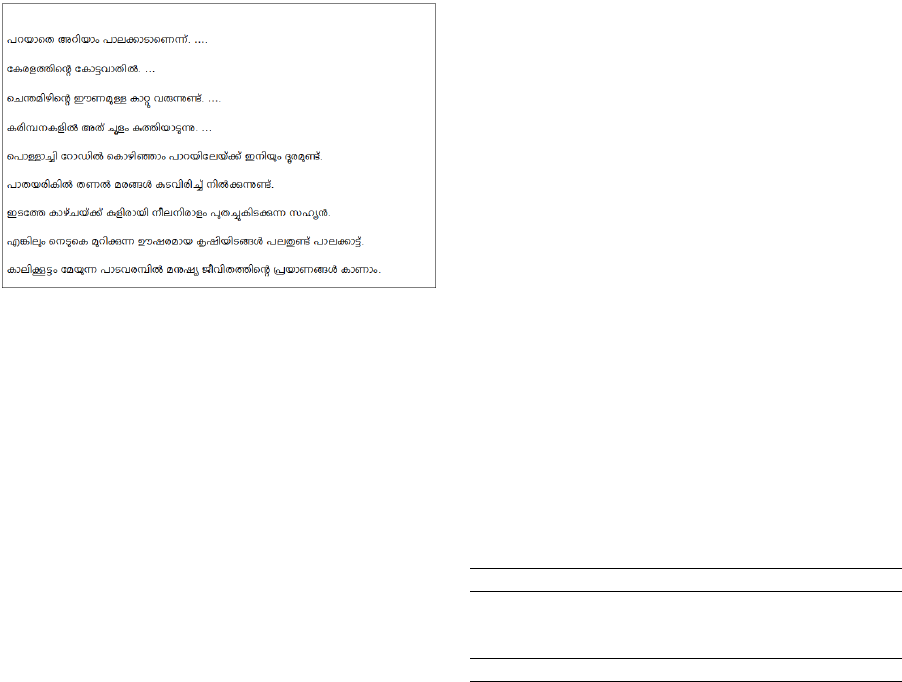

In Figure 1, Example 1 is written in Tamil with two im-

ages and Example 2 is written in English and Tamil (Roman

Script) with two images. In the first example, the meme is

trolling about the “Vim dis-washer” soap. The informa-

tion in Example 1 can be translated into English as “the

price of a lemon is five Rupees”, whereby the image be-

low shows a crying person. Just after the crying person the

text says “The price of a Vim bar with the power of 100

Lemon is just 10 Rupees”. This is an example of opinion

manipulation with trolling as it influences the user opin-

ion about products, companies and politics. This kind of

memes might be effective in two ways. On the one hand, it

is easy for companies and political parties to gain popular-

ity. On the other hand, the trolls can damage the reputation

of the company name or political party name. Example 2

shows a funny meme; it shows that a guy is talking to a

poor lady while the girl in the car is looking at them. The

image below includes a popular Tamil comedy actor with a

short text written beneath “We also talk nicely to ladies to

get into a relationship”.

Even though there is a widespread culture of memes on the

Internet, the research on the classification of memes is not

studied well. There are no systematic studies on classify-

ing memes in a troll or not-troll category. In this work,

we describe a dataset for classifying memes in such cat-

egories. To do this, we have collected a set of original

memes from volunteers. We present baseline results using

convolutional neural network (CNN) approaches for image

classification. We report our findings in precision, recall

and F-score and publish the code for this work at https:

//github.com/sharduls007/TamilMemes.

2. Troll Meme

A troll meme is an implicit image that intents to demean or

offend an individual on the Internet. Based on the defini-

tion “Trolling is the activity of posting a message via social

media that tend to be offensive, provocative, or menacing

(Bishop, 2013; Bishop, 2014; Mojica de la Vega and Ng,

2018)”. Their main function is to distract the audience with

the intent of provoking them. We define troll memes as

a meme, which contains offensive text and non-offensive

images, offensive images with non-offensive text, sarcasti-

cally offensive text with non-offensive images, or sarcastic

images with offensive text to provoke, distract, and has a

digressive or off-topic content with intend to demean or of-

fend particular people, group or race.

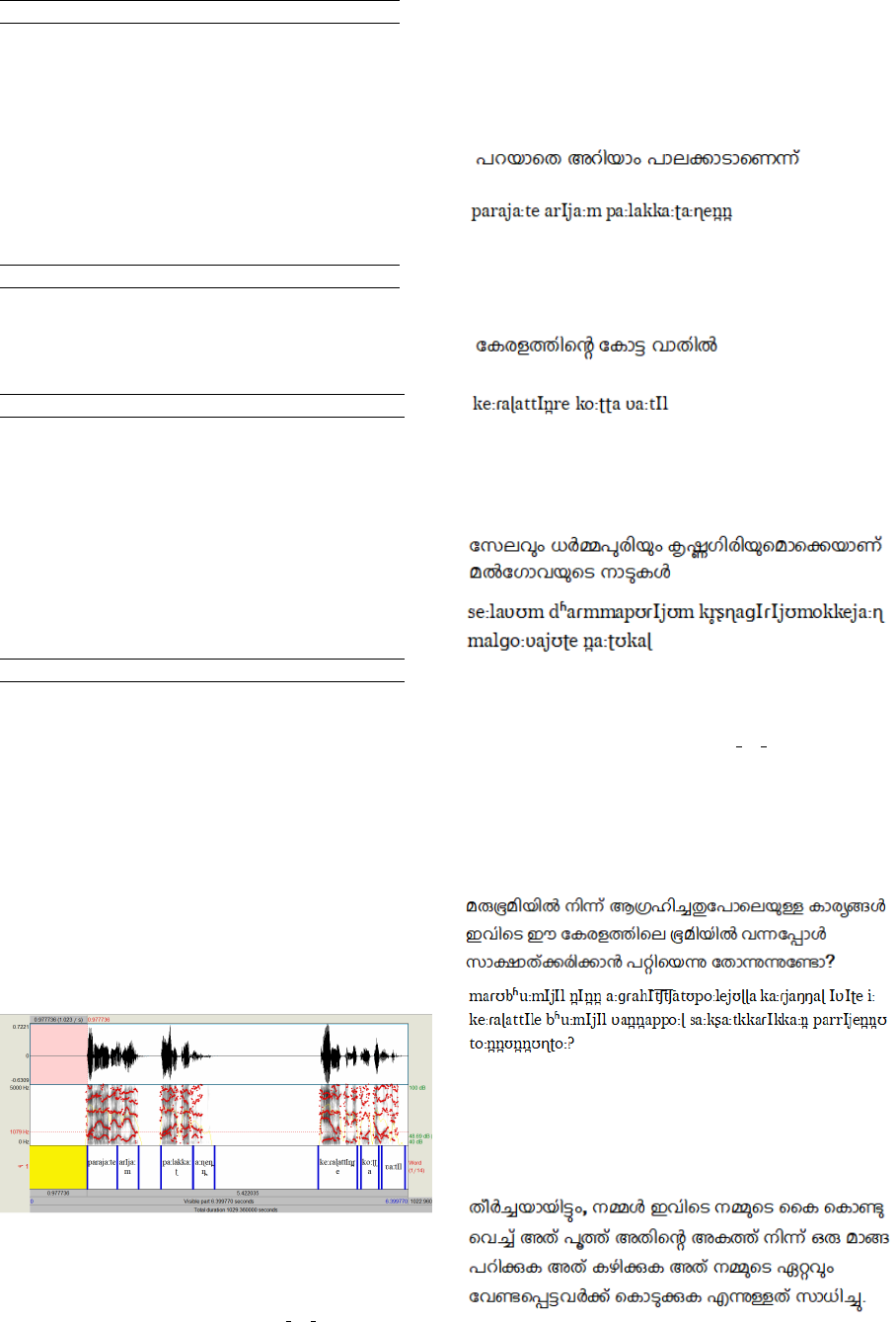

Figure 2 shows examples of trolling memes, Example 3 is

trolling the potato chip brand called Lays. The translation

of the text is “If you buy one packet of air, then 5 chips

free”, with its intention to damage the company’s reputa-

tion. Figure 2 illustrates examples of not-troll memes. The

translation of Example 4 would be “Sorry my friend (girl)”.

As this example does not contain any provoking or offen-

sive content and is even funny, it should be listed in the

not-troll category.

As a troll meme is directed towards someone, it is easy to

find such content in the comments section or group chat of

social media. For our work, we collected memes from vol-

unteers who sent them through WhatsApp, a social media

for chatting and creating a group chat. The suspected troll

(a) Example 3

(b) Example 4

Figure 2: Examples of troll and not-troll memes.

memes then have been verified and annotated manually by

the annotators. As the users who sent these troll memes be-

long to the Tamil speaking population, all the troll memes

are in Tamil. The general format of the meme is the image

and Tamil text embedded within the image.

Most of the troll memes comes from the state of Tamil

Nadu, in India. The Tamil language, which has 75 mil-

lion speakers,

1

belongs to the Dravidian language family

(Rao and Lalitha Devi, 2013; Chakravarthi et al., 2019a;

Chakravarthi et al., 2019b; Chakravarthi et al., 2019c) and

is one of the 22 scheduled languages of India (Dash et al.,

2015). As these troll memes can have a negative psycholog-

ical effect on an individual, a constraint has to be in place

for such a conversation. In this work, we are attempting to

identify such troll memes by providing a dataset and image

classifier to identify these memes.

3. Related Work

Trolling in social media for text has been studied exten-

sively (Bishop, 2013; Bishop, 2014; Mojica de la Vega

and Ng, 2018; Malmasi and Zampieri, 2017; Kumar et al.,

2018; Kumar, 2019). Opinion manipulation trolling (Mi-

haylov et al., 2015b; Mihaylov et al., 2015a), troll com-

ments in News Community (Mihaylov and Nakov, 2016),

and the role of political trolls (Atanasov et al., 2019) have

been studied. All these considered the trolling on text-only

media. However, meme consist of images or images with

text.

1

https://www.ethnologue.com/language/tam

8

A related research area is on offensive content detection.

Various works in the recent years have investigated Offen-

sive and Aggression content in text (Clarke and Grieve,

2017; Mathur et al., 2018; Nogueira dos Santos et al.,

2018; Galery et al., 2018). For images, Gandhi et al.

(2019) deals with offensive images and non-compliant lo-

gos. They have developed a computer-vision driven of-

fensive and non-compliant image detection algorithm that

identifies the offensive content in the image. They have

categorized images as offensive if it has nudity, sexually

explicit content, abusive text, objects used to promote vio-

lence or racially inappropriate content. The classifier takes

advantage of a pre-trained object detector to identify the

type of object in the image and then sends the image to

the unit which specializes in detecting objects in the image.

The majority of memes do not contain nudity or explicit

sexual content due to the moderation of social media on

nudity. Hence unlike their research, we are trying to iden-

tify troll memes by using image features derived by use of

a convolutional neural network.

Hate speech is a subset of offensive language and datasets

associated with hate speech have been collected from so-

cial media such as Twitter (Xiang et al., 2012), Instagram

(Hosseinmardi et al., 2015), Yahoo (Nobata et al., 2016),

YouTube (Dinakar et al., 2012). In all of these works, only

text corpora have been used to detect trolling, offensive, ag-

gression and hate speech. Nevertheless, for memes, there is

no such dataset. For Indian language memes, it is not avail-

able as to our knowledge. We are the first to develop a

meme dataset for Tamil, with troll or not-troll annotation.

4. Dataset

4.1. Ethics

For our study, people provided memes voluntarily for our

research. Additionally, all personal identifiable informa-

tion such as usernames are deleted from this dataset. The

annotators were warned about the trolling content before

viewing the meme, and our instructions informed them that

they could quit the annotation campaign anytime if they felt

uncomfortable.

4.2. Data collection

To retrieve high-quality meme data that would likely to in-

clude trolling, we asked the volunteers to provide us with

memes that they get in their social media platforms, like

WhatsApp, Facebook, Instagram, and Pinterest. The data

was collected between November 1, 2019, until January

15, 2019, from sixteen volunteers. We are not disclosing

any personal information of the volunteers such as gender

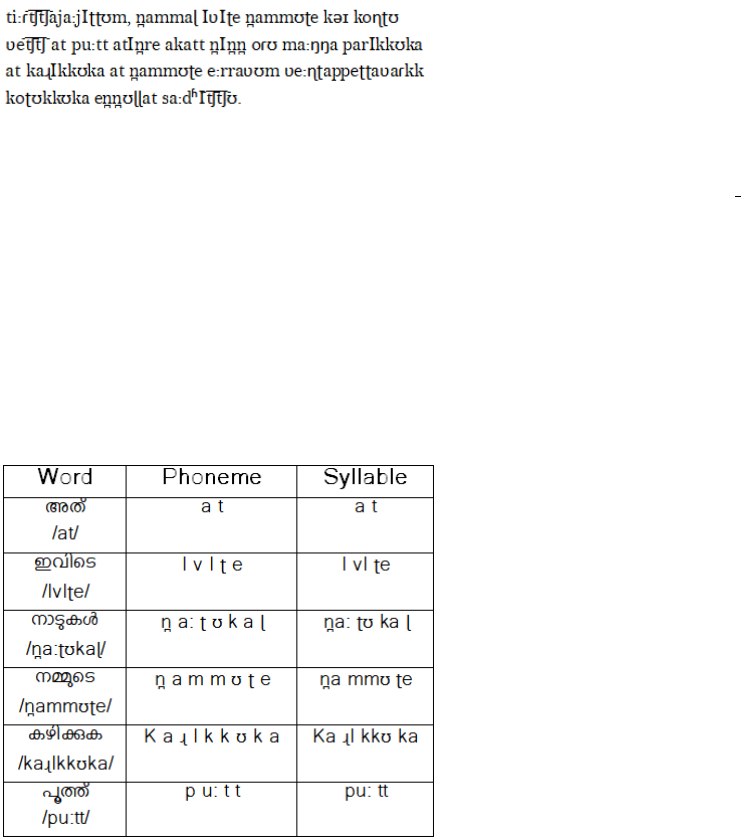

as per their will. Figure 3 shows an example of the collected

memes. We removed duplicate memes, however, we kept

memes that uses the same image but different text. This

was a challenging task since the same meme could have

different file names. Hence the same meme could be an-

notated by different annotators. Due to this, we checked

manually and removed such duplicates before sending them

to annotators. An example is shown in Figure 3, where the

same image with different text is used. Example 5 describes

the image as “can not understand what you are saying”,

whereby Example 6 describes image as “I am confused”.

(a) Example 5

(b) Example 6

Figure 3: Examples on same image with different text.

4.3. Annotation

After we obtained the memes, we presented this data to the

annotators using Google Forms. To not over-burden the

annotators, we provided ten memes per page and hundred

memes per form. For each form, the annotators are asked to

decide if a given meme is of category troll or not-troll. As a

part of annotation guidelines, we gave multiple examples of

troll memes and not-troll memes to the annotators. The an-

notation for these examples has been done by the an anno-

tator who is considered as a expert as well as a native Tamil

speaker. Each meme is assigned to two different annota-

tors, a male and a female annotator. To ensure the quality

of the annotations and due to the region-specific nature of

the annotation task, only native speakers from Tamil Nadu,

India were recruited as annotators. Although we are not

disclosing the gender demographics of volunteers who pro-

vided memes, we have gender-balanced annotation since

each meme has been annotated by a male and a female.A

meme is considered as troll only when both of the annota-

tors label it as a troll.

4.4. Inter-Annotator Agreement

In order to evaluate the reliability of the annotation and

their robustness across experiments, we analyzed the inter-

annotator agreement using Cohen’s kappa (Cohen, 1960).

It compares the probability of two annotators agreeing by

chance with the observed agreement. It measures agree-

ment expected by chance by modelling each annotator with

separate distribution governing their likelihood of assigning

9

a particular category. Mathematically,

K =

p(A) − p(E)

1 − p(E)

(1)

where K is the kappa value, p(A) is the probability of

the actual outcome and p(E) is the probability of the ex-

pected outcome as predicted by chance (Bloodgood and

Grothendieck, 2013). We got a kappa value of 0.62 be-

tween two annotators (gender balance male and female an-

notators). Based on Landis and Koch (1977) and given the

inherent obscure nature of memes, we got fair agreement

amongst the annotators.

4.5. Data Statistics

We collected 2,969 memes, of which most are images with

text embedded on them. After the annotation, we learned

that the majority (1,951) of these were annotated as troll

memes, and 1,018 as not-troll memes. Furthermore, we

observed that memes, which have more than one image

have a high probability of being a troll, whereas those with

only one image are likely to be not-troll. We included

Flickr30K

2

images (Young et al., 2014) to the not-troll cat-

egory to address the class imbalance. Flickr30K is only

added to training, while the test set is randomly chosen

from our dataset. In all our experiments the test set remains

the same.

5. Methodology

To demonstrate how the given dataset can be used to clas-

sify troll memes, we defined two experiments with four

variations of each. We measured the performance of the

proposed baselines by using precision, recall and F1-score

for each class, i.e. “troll and not-troll”. We used ResNet

(He et al., 2016) and MobileNet (Howard et al., 2017) as a

baseline to perform the experiments. We give insights into

their architecture and design choices in the sections below.

ResNet

ResNet has won the ImageNet ILSVRC 2015 (Rus-

sakovsky et al., 2015) classification task. It is still a popu-

lar method for classifying images and uses residual learn-

ing which connects low-level and high-level representation

directly by skipping the connections in-between. This im-

proves the performance of ResNet by diminishing the prob-

lem of vanishing gradient descent. It assumes that a deeper

network should not produce higher training error than a

shallow network. In this experiment, we used the ResNet

architecture with 176 layers. As it was trained on the

ImageNet task, we removed the classification (last) layer

and used GlobalAveragePooling in place of fully connected

layer to save the computational cost. Later, we added

four fully connected layers with the classification layer

which has a sigmoid activation function.This architecture

is trained with or without pre-trained ImageNet weights.

MobileNet

We trained MobileNet with and without ImageNet weights.

The model has a depth multiplier of 1.4, and an input di-

mension of 224×224 pixels. This provides a 1, 280×1.4 =

2

https://github.com/BryanPlummer/flickr30k entities

1, 792 -dimensional representation of an image, which is

then passed through a single hidden layer of a dimensional-

ity of 1, 024 with ReLU activation, before being passed to

a hidden layer with input dimension of (512,None) without

any activation to provide the final representation h

p

. The

main purpose of MobileNet is to optimize convolutional

neural networks for mobile and embedded vision applica-

tions. It is less complex than ResNet in terms of number of

hyperparameters and operations. It uses a different convo-

lutional layer for each channel, this allows parallel compu-

tation on each channel which is Depthwise Separable Con-

volution. Later on the features extracted from these layers

have been combined using the pointwise convolution layer.

We used MobileNet to reduce the computational cost and

compare it with the computationally intensive ResNet.

6. Experiments

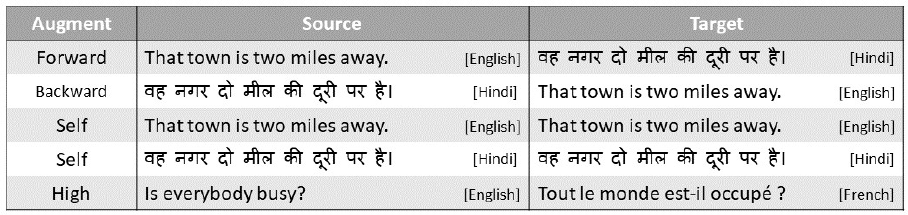

We experimented with ResNet and MobileNet. The varia-

tion in experiments comes in terms of the data on which the

models have been trained on, while the test set (300 memes)

remained the same for all experiments. In the first variation,

TamilMemes in Table 1, we trained the ResNet and Mo-

bileNet models on our Tamil meme dataset(2,669 memes).

The second variation, i.e. TamilMemes + ImageNet uses

pre-trained ImageNet weights on the Tamil memes dataset.

To address the class imbalance, we added 1,000 images

from the Flickr30k dataset to the training set in the third

variation i.e. TamilMemes + ImageNet + Flickr1k. As

a result, the third variation has 3,969 images (1,951 trolls

and 2,018 not-trolls). In the last variation, TamilMemes +

ImageNet + Flickr30k, we added 30,000 images from the

Flickr30k dataset to not-troll category. Flickr dataset has

images and the captions which describes the image. We

used these images as a not-troll category because they do

not convey trollings without the context of the text. Except

for the TamilMemes baseline, we are using pre-trained Im-

ageNet weights for all other variations. Images from the

Flickr30k dataset are used to balance the not-troll class in

the TamilMemes + ImageNet + Flickr1k variation. On the

one hand, the use of all the samples from the Flickr30k

dataset as not-troll in the fourth variation introduces the

class imbalance by significantly increasing the number of

not-troll samples compared to the troll one. On the other

hand, in the first variation, a higher number of troll meme

samples again introduces a class imbalance.

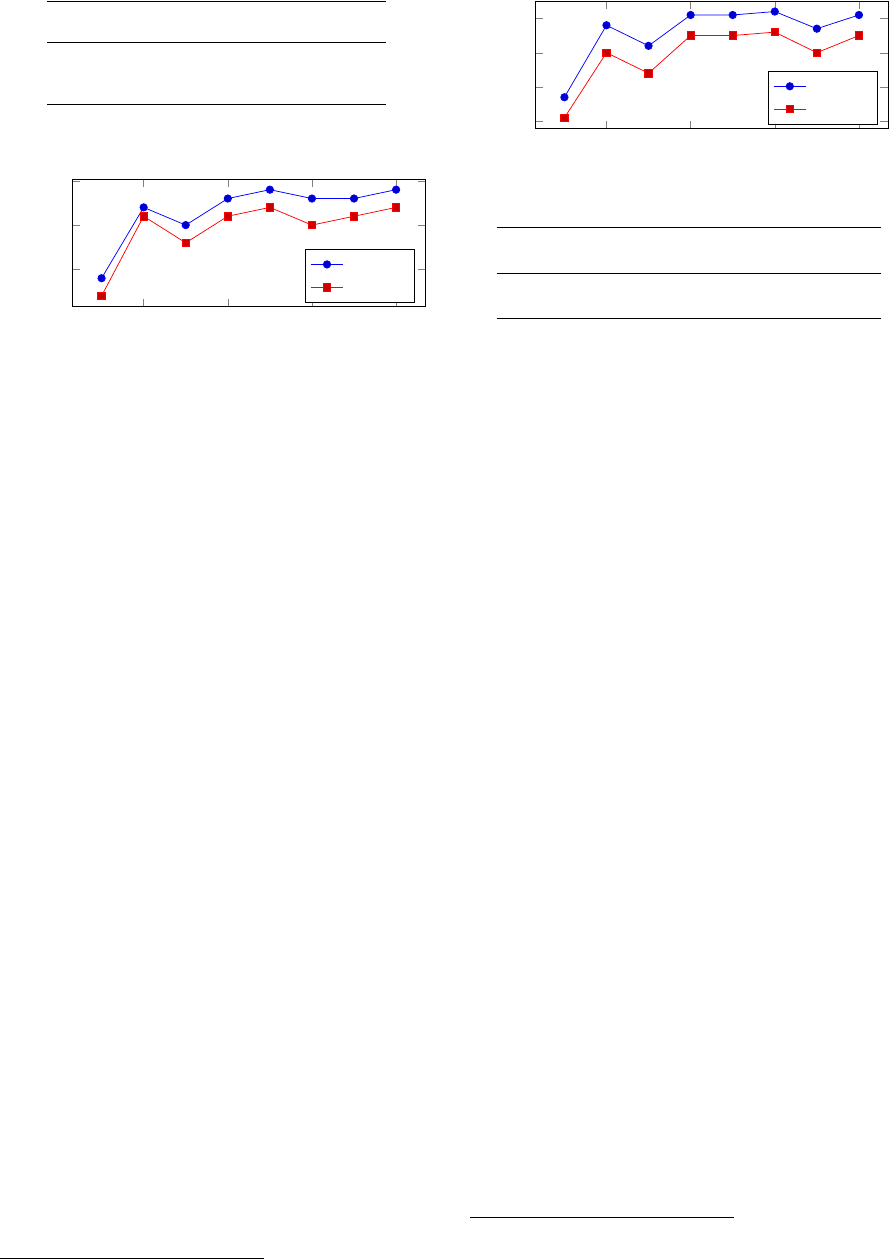

7. Result and Discussion

In the ResNet variations, we observed that there is no

change in the macro averaged precision, recall and F1-score

except for TamilMemes + ImageNet + Flickr1k variation.

This variation has relatively poor results when compared

with the other three variations in ResNet. While preci-

sion at identifying the troll class for the ResNet baseline

does not vary much, we get better precision at classify-

ing troll memes in the TamilMemes variation. This shows

that the ResNet model trained on just Tamil memes has

a better chance at identifying troll memes. The scenario

is different in the case of the MobileNet variations. On

the one hand, we observed less precision at identifying

the troll class for the TamilMemes variation. On the other

10

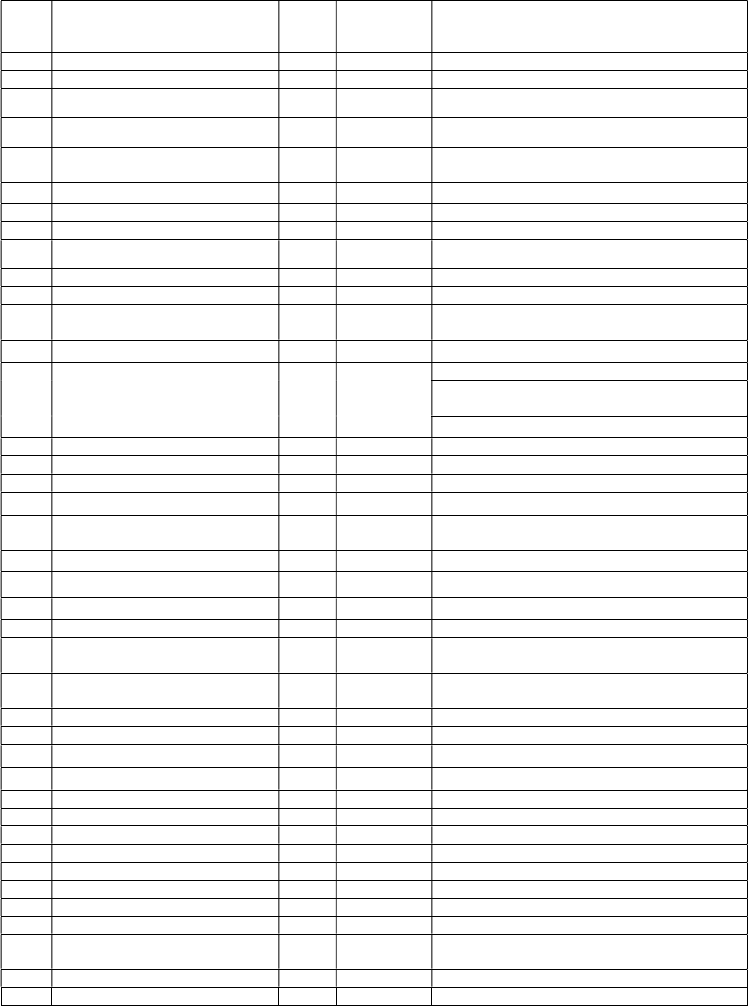

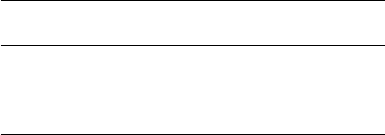

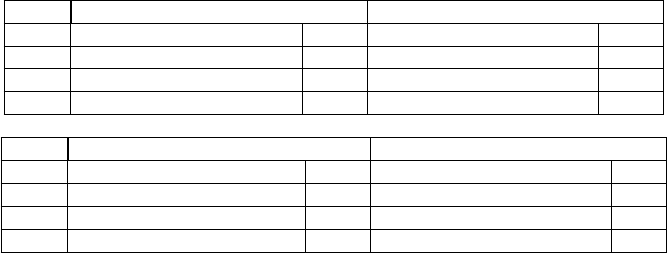

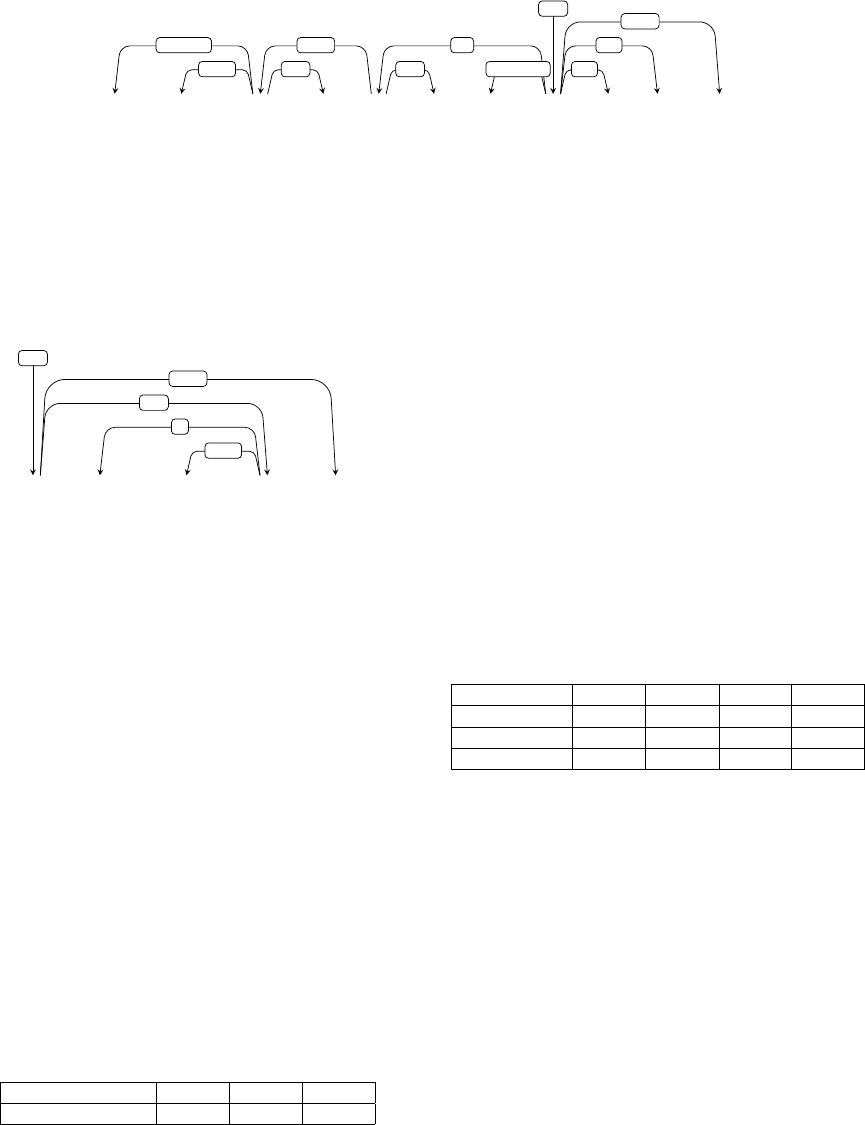

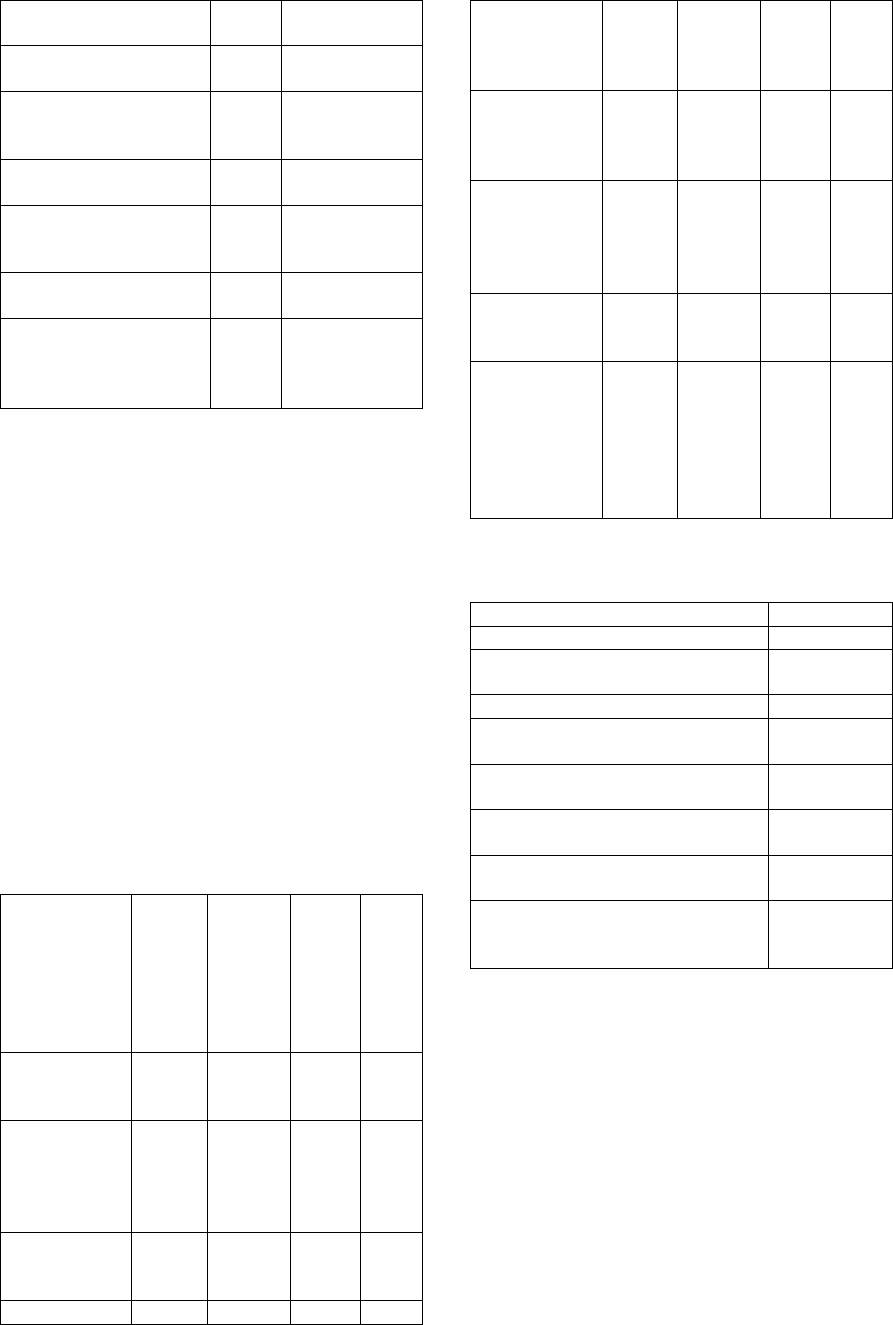

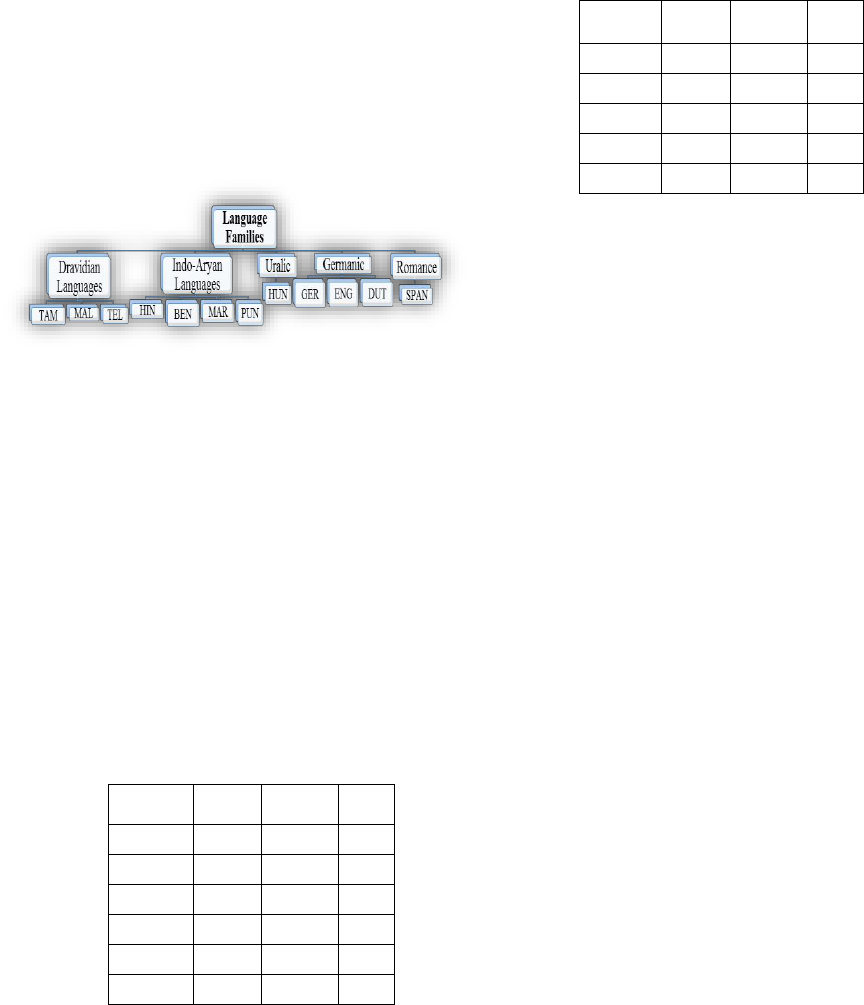

ResNET

Variations TamilMemes TamilMemes + ImageNet

Precision Recall f1-score count Precision Recall f1-score count

troll 0.37 0.33 0.35 100 0.36 0.35 0.35 100

not-troll 0.68 0.71 0.70 200 0.68 0.69 0.68 200

macro-avg 0.52 0.52 0.52 300 0.52 0.52 0.52 300

weighted-avg 0.58 0.59 0.58 300 0.57 0.57 0.57 300

Variations TamilMemes + ImageNet + Flickr1k TamilMemes + ImageNet + Flickr30k

troll 0.30 0.34 0.32 100 0.36 0.35 0.35 100

not-troll 0.64 0.59 0.62 200 0.68 0.69 0.68 200

macro-avg 0.47 0.47 0.47 300 0.52 0.52 0.52 300

weighted-avg 0.53 0.51 0.52 300 0.57 0.57 0.57 300

MobileNet

Variations TamilMemes TamilMemes + ImageNet

troll 0.28 0.27 0.28 100 0.34 0.43 0.38 100

not-troll 0.64 0.66 0.65 200 0.67 0.58 0.62 200

macro-avg 0.46 0.46 0.46 300 0.50 0.51 0.50 300

weighted-avg 0.52 0.53 0.52 300 0.56 0.53 0.54 300

Variations TamilMemes + ImageNet + Flickr1k TamilMemes + ImageNet + Flickr30k

troll 0.33 0.55 0.41 100 0.31 0.34 0.33 100

not-troll 0.66 0.45 0.53 200 0.65 0.62 0.64 200

macro-avg 0.50 0.50 0.47 300 0.48 0.48 0.48 300

weighted-avg 0.55 0.48 0.49 300 0.54 0.53 0.53 300

Table 1: Precision, recall, F1-score and count for ResNet, MobileNet and their variations.

hand, we see improvement in precision at detecting trolls

in the TamilMeme + ImageNet variation. This shows that

MobileNet can leverage transfer learning to improve re-

sults. The relatively poor performance of MobileNet on

the TamilMeme variation shows that it can not learn com-

plex features like ResNet does to identify troll memes. For

ResNet, the trend in the macro averaged score can be seen

increasing in TamilMemes + ImageNet and TamilMemes

+ ImageNet + Flickr1k variations when compared to the

TamilMemes variation. The TamilMemes + ImageNet +

Flickr30k variation shows a lower macro averaged score

than that of the TamilMemes + ImageNet + Flickr1k vari-

ation in both MobileNet and ResNet. Overall the preci-

sion for troll class identification lies in the range of 0.28

and 0.37, which is rather less than that of the not-troll

class which lies in the range of 0.64 and 0.68. When

we train ResNet in class imbalanced data in TamilMemes

and TamilMemes + ImageNet + Flickr30k variations, re-

sults shows that the macro-averaged score of these varia-

tions are not hampered by the class imbalance issue. While

for same variations MobileNet shows poor macro-averaged

precision and recall score when compared with other vari-

ations. This shows that MobileNet is more susceptible to

class imbalance issue than ResNet.

8. Conclusions and Future work

As shown in the Table 1 the classification model performs

poorly at identifying of troll memes. We observed that

this stems from the problem characteristics of memes. The

meme dataset is unbalanced and memes have both im-

age and text embedded to it with code-mixing in different

forms. Therefore, it is inherently more challenging to train

a classifier using just images. Further, the same image can

be used with different text to mean different things, poten-

tially making the task more complicated.

To reduce the burden placed on annotators, we plan to use a

semi-supervised approach to the size of the dataset. Semi-

supervised approaches have been proven to be of good use

to increase the size of the datasets for under-resourced sce-

narios. We plan to use optical character recognizer (OCR)

followed by a manual evaluation to obtain the text in the im-

ages. Since Tamil memes have code-mixing phenomenon,

we plan to tackle the problem accordingly. With text iden-

tification using OCR, we will be able to approach the prob-

lem in a multi-modal way. We have created a meme dataset

only for Tamil, but we plan to extend this to other languages

as well.

Acknowledgments

This publication has emanated from research supported in

part by a research grant from Science Foundation Ireland

(SFI) under Grant Number SFI/12/RC/2289 P2, co-funded

by the European Regional Development Fund, as well as

by the H2020 project Pr

ˆ

et-

`

a-LLOD under Grant Agreement

number 825182.

Bibliographical References

Atanasov, A., De Francisci Morales, G., and Nakov, P.

(2019). Predicting the role of political trolls in social

media. In Proceedings of the 23rd Conference on Com-

putational Natural Language Learning (CoNLL), pages

1023–1034, Hong Kong, China, November. Association

for Computational Linguistics.

Bishop, J. (2013). The effect of de-individuation of the in-

ternet troller on criminal procedure implementation: An

11

interview with a hater - proquest. International journal

of cyber criminology, page 28–48.

Bishop, J. (2014). Dealing with internet trolling in politi-

cal online communities: Towards the this is why we can’t

have nice things scale. Int. J. E-Polit., 5(4):1–20, Octo-

ber.

Bloodgood, M. and Grothendieck, J. (2013). Analysis of

stopping active learning based on stabilizing predictions.

In Proceedings of the Seventeenth Conference on Com-

putational Natural Language Learning, pages 10–19,

Sofia, Bulgaria, August. Association for Computational

Linguistics.

Chakravarthi, B. R., Arcan, M., and McCrae, J. P. (2018).

Improving Wordnets for Under-Resourced Languages

Using Machine Translation. In Proceedings of the 9th

Global WordNet Conference. The Global WordNet Con-

ference 2018 Committee.

Chakravarthi, B. R., Arcan, M., and McCrae, J. P. (2019a).

Comparison of Different Orthographies for Machine

Translation of Under-Resourced Dravidian Languages.

In Maria Eskevich, et al., editors, 2nd Conference

on Language, Data and Knowledge (LDK 2019), vol-

ume 70 of OpenAccess Series in Informatics (OASIcs),

pages 6:1–6:14, Dagstuhl, Germany. Schloss Dagstuhl–

Leibniz-Zentrum fuer Informatik.

Chakravarthi, B. R., Arcan, M., and McCrae, J. P. (2019b).

WordNet gloss translation for under-resourced languages

using multilingual neural machine translation. In Pro-

ceedings of the Second Workshop on Multilingualism at

the Intersection of Knowledge Bases and Machine Trans-

lation, pages 1–7, Dublin, Ireland, August. European As-

sociation for Machine Translation.

Chakravarthi, B. R., Priyadharshini, R., Stearns, B., Jaya-

pal, A., S, S., Arcan, M., Zarrouk, M., and McCrae,

J. P. (2019c). Multilingual multimodal machine transla-

tion for Dravidian languages utilizing phonetic transcrip-

tion. In Proceedings of the 2nd Workshop on Technolo-

gies for MT of Low Resource Languages, pages 56–63,

Dublin, Ireland, August. European Association for Ma-

chine Translation.

Chakravarthi, B. R., Jose, N., Suryawanshi, S., Sherly,

E., and McCrae, J. P. (2020a). A sentiment analy-

sis dataset for code-mixed Malayalam-English. In Pro-

ceedings of the 1st Joint Workshop of SLTU (Spoken

Language Technologies for Under-resourced languages)

and CCURL (Collaboration and Computing for Under-

Resourced Languages) (SLTU-CCURL 2020), Marseille,

France, May. European Language Resources Association

(ELRA).

Chakravarthi, B. R., Muralidaran, V., Priyadharshini, R.,

and McCrae, J. P. (2020b). Corpus creation for senti-

ment analysis in code-mixed Tamil-English text. In Pro-

ceedings of the 1st Joint Workshop of SLTU (Spoken

Language Technologies for Under-resourced languages)

and CCURL (Collaboration and Computing for Under-

Resourced Languages) (SLTU-CCURL 2020), Marseille,

France, May. European Language Resources Association

(ELRA).

Clarke, I. and Grieve, J. (2017). Dimensions of abusive

language on Twitter. In Proceedings of the First Work-

shop on Abusive Language Online, pages 1–10, Vancou-

ver, BC, Canada, August. Association for Computational

Linguistics.

Cohen, J. (1960). A Coefficient of Agreement for Nom-

inal Scales. Educational and Psychological Measure-

ment, 20(1):37.

Dash, N. S., Selvraj, A., and Hussain, M. (2015). Gen-

erating translation corpora in Indic languages:cultivating

bilingual texts for cross lingual fertilization. In Proceed-

ings of the 12th International Conference on Natural

Language Processing, pages 333–342, Trivandrum, In-

dia, December. NLP Association of India.

Dinakar, K., Jones, B., Havasi, C., Lieberman, H., and Pi-

card, R. (2012). Common sense reasoning for detection,

prevention, and mitigation of cyberbullying. ACM Trans.

Interact. Intell. Syst., 2(3), September.

Galery, T., Charitos, E., and Tian, Y. (2018). Aggres-

sion identification and multi lingual word embeddings.

In Proceedings of the First Workshop on Trolling, Ag-

gression and Cyberbullying (TRAC-2018), pages 74–79,

Santa Fe, New Mexico, USA, August. Association for

Computational Linguistics.

Gandhi, S., Kokkula, S., Chaudhuri, A., Magnani, A., Stan-

ley, T., Ahmadi, B., Kandaswamy, V., Ovenc, O., and

Mannor, S. (2019). Image matters: Detecting offen-

sive and non-compliant content/logo in product images.

arXiv preprint arXiv:1905.02234.

He, K., Zhang, X., Ren, S., and Sun, J. (2016). Deep

residual learning for image recognition. In 2016 IEEE

Conference on Computer Vision and Pattern Recogni-

tion, CVPR 2016, Las Vegas, NV, USA, June 27-30, 2016,

pages 770–778.

Hosseinmardi, H., Mattson, S. A., Rafiq, R. I., Han, R., Lv,

Q., and Mishra, S. (2015). Analyzing labeled cyberbul-

lying incidents on the instagram social network. In Inter-

national conference on social informatics, pages 49–66.

Springer.

Howard, A. G., Zhu, M., Chen, B., Kalenichenko, D.,

Wang, W., Weyand, T., Andreetto, M., and Adam, H.

(2017). Mobilenets: Efficient convolutional neural net-

works for mobile vision applications. arXiv preprint

arXiv:1704.04861.

Jose, N., Chakravarthi, B. R., Suryawanshi, S., Sherly, E.,

and McCrae, J. P. (2020). A survey of current datasets

for code-switching research. In 2020 6th International

Conference on Advanced Computing and Communica-

tion Systems (ICACCS).

Kumar, R., Ojha, A. K., Malmasi, S., and Zampieri, M.

(2018). Benchmarking aggression identification in so-

cial media. In Proceedings of the First Workshop on

Trolling, Aggression and Cyberbullying (TRAC-2018),

pages 1–11, Santa Fe, New Mexico, USA, August. As-

sociation for Computational Linguistics.

Kumar, R. (2019). # shutdownjnu vs# standwithjnu: A

study of aggression and conflict in political debates on

social media in india. Journal of Language Aggression

and Conflict.

Landis, J. R. and Koch, G. G. (1977). The measurement

of observer agreement for categorical data. Biometrics,

12

33(1):159–174.

Malmasi, S. and Zampieri, M. (2017). Detecting hate

speech in social media. In Proceedings of the Interna-

tional Conference Recent Advances in Natural Language

Processing, RANLP 2017, pages 467–472, Varna, Bul-

garia, September. INCOMA Ltd.

Mathur, P., Shah, R., Sawhney, R., and Mahata, D.

(2018). Detecting offensive tweets in Hindi-English

code-switched language. In Proceedings of the Sixth In-

ternational Workshop on Natural Language Processing

for Social Media, pages 18–26, Melbourne, Australia,

July. Association for Computational Linguistics.

Mihaylov, T. and Nakov, P. (2016). Hunting for troll com-

ments in news community forums. In Proceedings of the

54th Annual Meeting of the Association for Computa-

tional Linguistics (Volume 2: Short Papers), pages 399–

405, Berlin, Germany, August. Association for Compu-

tational Linguistics.

Mihaylov, T., Georgiev, G., and Nakov, P. (2015a). Find-

ing opinion manipulation trolls in news community fo-

rums. In Proceedings of the Nineteenth Conference on

Computational Natural Language Learning, pages 310–

314, Beijing, China, July. Association for Computational

Linguistics.

Mihaylov, T., Koychev, I., Georgiev, G., and Nakov, P.

(2015b). Exposing paid opinion manipulation trolls. In

Proceedings of the International Conference Recent Ad-

vances in Natural Language Processing, pages 443–450,

Hissar, Bulgaria, September. INCOMA Ltd. Shoumen,

BULGARIA.

Mojica de la Vega, L. G. and Ng, V. (2018). Modeling

trolling in social media conversations. In Proceedings

of the Eleventh International Conference on Language

Resources and Evaluation (LREC 2018), Miyazaki,

Japan, May. European Language Resources Association

(ELRA).

Nobata, C., Tetreault, J., Thomas, A., Mehdad, Y., and

Chang, Y. (2016). Abusive language detection in online

user content. In Proceedings of the 25th international

conference on world wide web, pages 145–153.

Nogueira dos Santos, C., Melnyk, I., and Padhi, I. (2018).

Fighting offensive language on social media with unsu-

pervised text style transfer. In Proceedings of the 56th